In the last post, Advertising IPs In EVPN Route Type 2, I described how to get IPs advertised in EVPN route type 2. But why do we need this? There are three main scenarios where having the MAC/IP mapping is useful:

- Host ARP.

- Host mobility.

- Host routing.

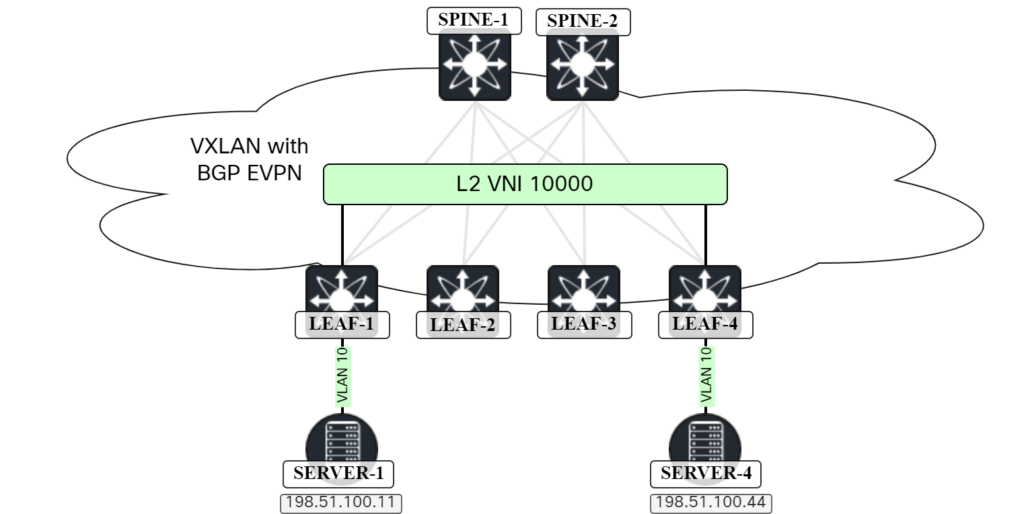

In this post I will cover the first use case and the topology below will be used:

Host ARP

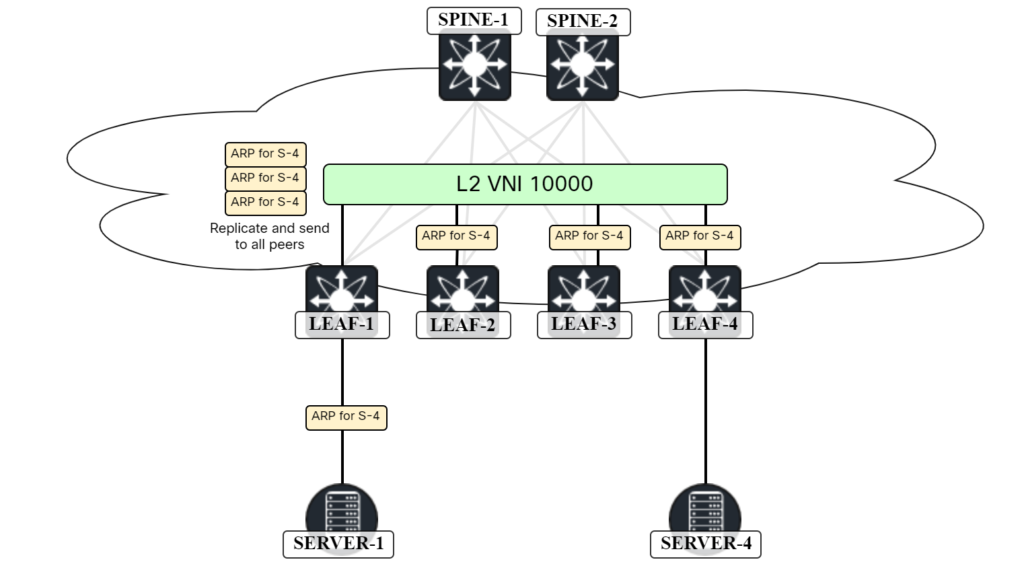

When two hosts in the same subnet want to send Ethernet frames to each other, they use ARP to discover each other’s MAC address. This is no different in a VXLAN/EVPN network. The ARP request is a broadcast, so it has to be flooded to the other VTEPs, either using multicast in the underlay or by ingress replication, and it must reach every VTEP that has that VNI. The scenario with ingress replication is shown below:

In this scenario, SERVER-1 is sending an ARP request to get the MAC address of SERVER-4. As all leafs participate in the L2 VNI, LEAF-1 performs ingress replication and sends a copy to every other leaf. However, sending the ARP request to LEAF-2 and LEAF-3 is unnecessary and wastes bandwidth. Currently there are four leafs, but what if this were a pod of 32 leafs? Then 30 copies would be sent to VTEPs where SERVER-4 is not connected, and those VTEPs would flood the frame without ever getting a response. ARP suppression can be used to optimize this. Before configuring it, let’s see what happens without ARP suppression. SERVER-1 sends an ARP request for the MAC address of SERVER-4:
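The copy counts above are simple arithmetic. A minimal Python sketch that reproduces them, assuming every leaf is a member of the L2 VNI, ingress (head-end) replication is used, and the target host sits behind exactly one remote leaf; the function name is illustrative only:

```python
def ingress_replication_copies(num_leafs: int) -> tuple[int, int]:
    """Copies a leaf sends when flooding one ARP request with ingress replication.

    Assumes every leaf is a member of the L2 VNI and the target host sits
    behind exactly one remote leaf. Returns (copies_sent, unnecessary_copies).
    """
    if num_leafs < 2:
        raise ValueError("need at least two leafs to replicate anything")
    copies_sent = num_leafs - 1      # one unicast copy per remote VTEP
    unnecessary = copies_sent - 1    # every copy except the one to the target's VTEP
    return copies_sent, unnecessary


for leafs in (4, 32):
    sent, wasted = ingress_replication_copies(leafs)
    print(f"{leafs} leafs: {sent} copies sent, {wasted} unnecessary")
```

This prints `4 leafs: 3 copies sent, 2 unnecessary` (the copies to LEAF-2 and LEAF-3) and `32 leafs: 31 copies sent, 30 unnecessary`. The waste grows linearly with the number of leafs in the VNI.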

Frame 456: 60 bytes on wire (480 bits), 60 bytes captured (480 bits) on interface ens194, id 8

Ethernet II, Src: 00:50:56:ad:85:06, Dst: ff:ff:ff:ff:ff:ff

Address Resolution Protocol (request)

Hardware type: Ethernet (1)

Protocol type: IPv4 (0x0800)

Hardware size: 6

Protocol size: 4

Opcode: request (1)

Sender MAC address: 00:50:56:ad:85:06

Sender IP address: 198.51.100.11

Target MAC address: 00:00:00:00:00:00

Target IP address: 198.51.100.44

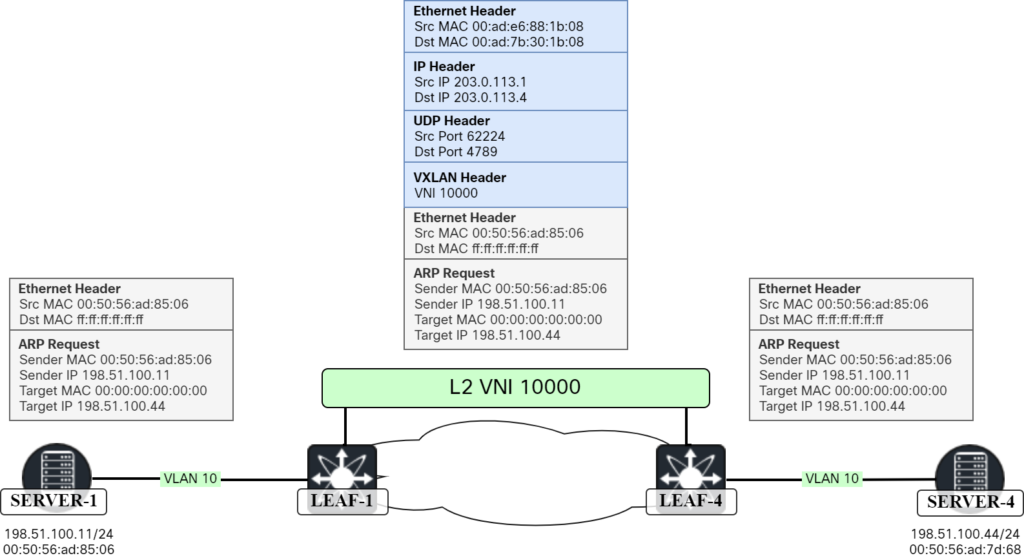

The frame is received by LEAF-1, which performs ingress replication and sends a copy to each of the other leafs. The copy sent towards LEAF-4 is shown below:

Frame 454: 124 bytes on wire (992 bits), 124 bytes captured (992 bits) on interface ens193, id 6

Ethernet II, Src: 00:ad:e6:88:1b:08, Dst: 00:ad:7b:30:1b:08

Internet Protocol Version 4, Src: 203.0.113.1, Dst: 203.0.113.4

User Datagram Protocol, Src Port: 62224, Dst Port: 4789

Virtual eXtensible Local Area Network

Flags: 0x0800, VXLAN Network ID (VNI)

Group Policy ID: 0

VXLAN Network Identifier (VNI): 10000

Reserved: 0

Ethernet II, Src: 00:ad:e6:88:1b:08, Dst: ff:ff:ff:ff:ff:ff

Address Resolution Protocol (request)

Hardware type: Ethernet (1)

Protocol type: IPv4 (0x0800)

Hardware size: 6

Protocol size: 4

Opcode: request (1)

Sender MAC address: 00:50:56:ad:85:06

Sender IP address: 198.51.100.11

Target MAC address: 00:00:00:00:00:00

Target IP address: 198.51.100.44
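The outer headers in this capture follow the VXLAN format from RFC 7348: UDP destination port 4789 and an 8-byte VXLAN header whose first byte carries the I flag (0x08) and whose 24-bit VNI field holds 10000. Wireshark displays the first 16 bits as the flags field, which is why it prints Flags: 0x0800, and the next 16 bits as a Group Policy ID. A minimal Python sketch of packing and parsing that header; the helper names are hypothetical and the Group Policy bits are not modeled:

```python
import struct

VXLAN_FLAG_I = 0x08  # "I" flag: the VNI field is valid (RFC 7348)


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # "!B3xI": 1 flag byte, 3 zero pad bytes, then a 32-bit word whose
    # upper 24 bits are the VNI and lowest 8 bits are reserved (zero).
    return struct.pack("!B3xI", VXLAN_FLAG_I, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Return the VNI from a VXLAN header, checking that the I flag is set."""
    flags, word = struct.unpack("!B3xI", header[:8])
    if not flags & VXLAN_FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return word >> 8


header = pack_vxlan_header(10000)
print(header.hex())        # 0800000000271000
print(unpack_vni(header))  # 10000
```

The first two bytes, `08 00`, are what Wireshark shows as Flags: 0x0800, and `00 27 10` is VNI 10000 in hexadecimal.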

This is also shown visually below:

Now that we understand what is going on without any optimization, let’s look at enabling ARP suppression.

It’s important to note that there must be an SVI for the switch to learn which IP is associated with a MAC address. Without an SVI, the switch would not be able to snoop any ARP frames. This is the current ARP cache of LEAF-1:

Leaf1# show ip arp vrf Tenant1

Flags: * - Adjacencies learnt on non-active FHRP router

+ - Adjacencies synced via CFSoE

# - Adjacencies Throttled for Glean

CP - Added via L2RIB, Control plane Adjacencies

PS - Added via L2RIB, Peer Sync

RO - Re-Originated Peer Sync Entry

D - Static Adjacencies attached to down interface

IP ARP Table for context Tenant1

Total number of entries: 1

Address Age MAC Address Interface Flags

198.51.100.11 00:09:47 0050.56ad.8506 Vlan10

Let’s enable ARP suppression on the L2 VNI:

Leaf1(config)# int nve1

Leaf1(config-if-nve)# member vni 10000

Leaf1(config-if-nve-vni)# suppress-arp

ERROR: Please configure TCAM region for Ingress ARP-Ether ACL before configuring ARP supression.

As the error shows, a TCAM region must be carved out before ARP suppression can be enabled:

Leaf1(config)# hardware access-list tcam region arp-ether 256 double-wide

ERROR: Aggregate TCAM region configuration exceeded the available Ingress TCAM slices. Please re-configure.

However, no resources are available. We need to reconfigure the slicing. Let’s see what the current slicing looks like:

Leaf1# show hardware access-list tcam region

IPV4 PACL [ifacl] size = 0

IPV6 PACL [ipv6-ifacl] size = 0

MAC PACL [mac-ifacl] size = 0

IPV4 Port QoS [qos] size = 0

IPV6 Port QoS [ipv6-qos] size = 0

MAC Port QoS [mac-qos] size = 0

FEX IPV4 PACL [fex-ifacl] size = 0

FEX IPV6 PACL [fex-ipv6-ifacl] size = 0

FEX MAC PACL [fex-mac-ifacl] size = 0

FEX IPV4 Port QoS [fex-qos] size = 0

FEX IPV6 Port QoS [fex-ipv6-qos] size = 0

FEX MAC Port QoS [fex-mac-qos] size = 0

IPV4 VACL [vacl] size = 0

IPV6 VACL [ipv6-vacl] size = 0

MAC VACL [mac-vacl] size = 0

IPV4 VLAN QoS [vqos] size = 0

IPV6 VLAN QoS [ipv6-vqos] size = 0

MAC VLAN QoS [mac-vqos] size = 0

IPV4 RACL [racl] size = 1536

IPV6 RACL [ipv6-racl] size = 0

IPV4 Port QoS Lite [qos-lite] size = 0

FEX IPV4 Port QoS Lite [fex-qos-lite] size = 0

IPV4 VLAN QoS Lite [vqos-lite] size = 0

IPV4 L3 QoS Lite [l3qos-lite] size = 0

Egress IPV4 QoS [e-qos] size = 0

Egress IPV6 QoS [e-ipv6-qos] size = 0

Egress MAC QoS [e-mac-qos] size = 0

Egress IPV4 VACL [vacl] size = 0

Egress IPV6 VACL [ipv6-vacl] size = 0

Egress MAC VACL [mac-vacl] size = 0

Egress IPV4 RACL [e-racl] size = 768

Egress IPV6 RACL [e-ipv6-racl] size = 0

Egress IPV4 QoS Lite [e-qos-lite] size = 0

IPV4 L3 QoS [l3qos] size = 256

IPV6 L3 QoS [ipv6-l3qos] size = 0

MAC L3 QoS [mac-l3qos] size = 0

Ingress System size = 256

Egress System size = 256

SPAN [span] size = 256

Ingress COPP [copp] size = 256

Ingress Flow Counters [flow] size = 0

Egress Flow Counters [e-flow] size = 0

Ingress SVI Counters [svi] size = 0

Redirect [redirect] size = 256

VPC Convergence/ES-Multi Home [vpc-convergence] size = 512

IPSG SMAC-IP bind table [ipsg] size = 0

Ingress ARP-Ether ACL [arp-ether] size = 0

ranger+ IPV4 QoS Lite [rp-qos-lite] size = 0

ranger+ IPV4 QoS [rp-qos] size = 256

ranger+ IPV6 QoS [rp-ipv6-qos] size = 256

ranger+ MAC QoS [rp-mac-qos] size = 256

NAT ACL[nat] size = 0

Mpls ACL size = 0

MOD RSVD size = 0

sFlow ACL [sflow] size = 0

mcast bidir ACL [mcast_bidir] size = 0

Openflow size = 0

(null) size = 0

Openflow Lite [openflow-lite] size = 0

Ingress FCoE Counters [fcoe-ingress] size = 0

Egress FCoE Counters [fcoe-egress] size = 0

Redirect-Tunnel [redirect-tunnel] size = 0

SPAN+sFlow ACL [span-sflow] size = 0

Openflow IPv6 [openflow-ipv6] size = 0

mcast performance ACL [mcast-performance] size = 0

Mpls Double Width ACL size = 0

N9K ARP ACL [n9k-arp-acl] size = 0

N3K V6 Span size = 0

N3K V6 L2 Span size = 0

Ingress Span size = 0

Redirect v4 size = 0

Redirect v6 size = 0

Fretta Nbm size = 0

vxlan p2p ACL [vxlan-p2p] size = 0

Vxlan Feature size = 0

(null) size = 0

Ingress VLAN QoS [vqos] size = 0

Egress VLAN QoS [e-vqos] size = 0

Ingress PACL v4 & v6 [ifacl-all] size = 0

Ingress RACL v4 & v6 [racl-all] size = 0

Ingress mVPN [ing-mvpn] size = 0

Ingress QoS L2/L3 [ing-l2-l3-qos] size = 0

HW Telemetry [hw-telemetry] size = 0

Egress PACL v4 [e-ifacl] size = 0

Egress PACL v6 [e-ifacl] size = 0

Egress PACL v4 v6 [e-ifacl-all] size = 0

Egress HW Telemetry [e-hw-telemetry] size = 0

Let’s take some TCAM from RACL by reducing it from 1536 entries to 512 entries:

Leaf1(config)# hardware access-list tcam region racl 512

Warning: Please save config and reload the system for the configuration to take effect

Note that this will require a reload. After the reload, it’s possible to carve out TCAM for ARP suppression:

Leaf1(config)# hardware access-list tcam region arp-ether 256 double-wide

Warning: Please save config and reload the system for the configuration to take effect

This requires another reload. Note that this feature requires double-wide allocation, where each configured entry occupies two TCAM entries, which means it needs a minimum of two slices. A slice can be 256 or 512 entries. With 256 double-wide entries, 512 entries have now been allocated to ARP suppression:

Leaf1# show hardware access-list tcam region | i arp-ether

Ingress ARP-Ether ACL [arp-ether] size = 256 (double-wide)
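To see why the first carving attempt failed and why shrinking RACL helps, the entry counts can be added up. This Python sketch is a simplified model, not how NX-OS performs the check. It assumes that the nonzero, non-egress regions from the output above share one ingress pool, and it assumes a hypothetical pool of 4096 entries, which happens to equal the sum of those regions. The dictionary keys are shorthand labels; `ingress-system` is made up because the output shows no keyword for that region.

```python
# Nonzero ingress regions from "show hardware access-list tcam region" above,
# in TCAM entries. Treating them as one shared pool is an ASSUMPTION.
INGRESS_REGIONS = {
    "racl": 1536, "l3qos": 256, "ingress-system": 256, "span": 256,
    "copp": 256, "redirect": 256, "vpc-convergence": 512,
    "rp-qos": 256, "rp-ipv6-qos": 256, "rp-mac-qos": 256,
}
INGRESS_POOL = 4096  # ASSUMPTION: hypothetical total ingress entries on this platform


def entries_consumed(size: int, double_wide: bool = False) -> int:
    """A double-wide entry occupies two physical TCAM entries."""
    return size * 2 if double_wide else size


def free_entries(regions: dict[str, int], pool: int = INGRESS_POOL) -> int:
    """Entries left in the (assumed) shared pool after all regions are carved."""
    return pool - sum(regions.values())


needed = entries_consumed(256, double_wide=True)
print("free now:", free_entries(INGRESS_REGIONS), "| arp-ether needs:", needed)

after = {**INGRESS_REGIONS, "racl": 512}  # hardware access-list tcam region racl 512
print("free after shrinking racl:", free_entries(after))
```

Under these assumptions nothing is free initially, while `arp-ether 256 double-wide` needs 512 entries. Shrinking RACL to 512 frees 1024 entries, enough for ARP suppression with room to spare.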

To understand more about TCAM slicing, refer to Understand how to Carve Nexus 9000 TCAM Space.

It’s now possible to configure ARP suppression under the VNI:

Leaf1(config)# int nve1

Leaf1(config-if-nve)# member vni 10000

Leaf1(config-if-nve-vni)# suppress-arp

With this configured, the switch snoops ARP frames in the L2 VNI. Local MAC/IP mappings are learned by snooping incoming ARP frames, while mappings for remote endpoints are learned from EVPN route type 2, which carries both the MAC and IP address of the endpoint. It’s important to note that the switch is not performing proxy ARP, where it would respond with the MAC address of its own SVI. Instead, it sends an ARP reply containing the information of the endpoint that was requested.
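The behavior described above can be modeled as a cache lookup. The Python sketch below is a simplified model, not the NX-OS implementation. The cache is a dictionary keyed by IP address, filled with the two entries from LEAF-1’s suppression cache shown later in this post. A hit produces a reply built from the endpoint’s own MAC address rather than the SVI MAC, and a miss falls back to flooding. The model answers every hit the same way; how NX-OS treats hits on locally learned entries, aging, and gratuitous ARP are not modeled, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CacheEntry:
    mac: str
    remote_vtep: Optional[str] = None  # None for locally learned entries


@dataclass(frozen=True)
class ArpReply:
    eth_src: str  # the requested endpoint's MAC, not the SVI MAC (no proxy ARP)
    eth_dst: str
    sender_mac: str
    sender_ip: str
    target_mac: str
    target_ip: str


def handle_arp_request(cache: dict[str, CacheEntry], requester_mac: str,
                       requester_ip: str, target_ip: str) -> Optional[ArpReply]:
    """Return an ARP reply on a cache hit, or None to signal 'flood as usual'."""
    entry = cache.get(target_ip)
    if entry is None:
        return None  # miss: flood via ingress replication or underlay multicast
    return ArpReply(
        eth_src=entry.mac, eth_dst=requester_mac,
        sender_mac=entry.mac, sender_ip=target_ip,
        target_mac=requester_mac, target_ip=requester_ip,
    )


leaf1_cache = {
    "198.51.100.11": CacheEntry("0050.56ad.8506"),                 # local (L)
    "198.51.100.44": CacheEntry("0050.56ad.7d68", "203.0.113.4"),  # remote (R)
}
reply = handle_arp_request(leaf1_cache, "0050.56ad.8506",
                           "198.51.100.11", "198.51.100.44")
print(reply.eth_src, reply.sender_ip)  # 0050.56ad.7d68 198.51.100.44
```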

Let’s check what the ARP suppression cache of LEAF-1 looks like:

Leaf1# show ip arp suppression-cache detail

Flags: + - Adjacencies synced via CFSoE

L - Local Adjacency

R - Remote Adjacency

L2 - Learnt over L2 interface

PS - Added via L2RIB, Peer Sync

RO - Dervied from L2RIB Peer Sync Entry

Ip Address Age Mac Address Vlan Physical-ifindex Flags Remote Vtep Addrs

198.51.100.11 00:13:24 0050.56ad.8506 10 Ethernet1/3 L

198.51.100.44 19:40:11 0050.56ad.7d68 10 (null) R 203.0.113.4

The entry for 198.51.100.11 is a local one as indicated by the L. The entry for 198.51.100.44 is remote as indicated by the R. It also shows what the remote VTEP is. If 198.51.100.11 sends an ARP request for 198.51.100.44, LEAF-1 will respond to this request. Let’s verify this by clearing the ARP cache on SERVER-1 and then sending an ICMP Echo towards SERVER-4:
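To check the cache programmatically, the rows above can be split on whitespace. This hypothetical Python helper assumes each row has exactly the columns shown in this output (IP, age, MAC, VLAN, interface, flags, and an optional remote VTEP), with `(null)` as the interface for remote entries. Screen-scraping CLI text like this is brittle, so treat it as a sketch.

```python
from typing import NamedTuple, Optional


class SuppressionEntry(NamedTuple):
    ip: str
    age: str
    mac: str
    vlan: int
    interface: Optional[str]    # None when the CLI prints "(null)"
    flags: str
    remote_vtep: Optional[str]  # only present on remote (R) entries


def parse_row(line: str) -> SuppressionEntry:
    """Parse one data row of 'show ip arp suppression-cache detail'."""
    fields = line.split()
    if len(fields) not in (6, 7):
        raise ValueError(f"unexpected row: {line!r}")
    ip, age, mac, vlan, interface, flags = fields[:6]
    return SuppressionEntry(
        ip, age, mac, int(vlan),
        None if interface == "(null)" else interface,
        flags,
        fields[6] if len(fields) == 7 else None,
    )


rows = [
    "198.51.100.11 00:13:24 0050.56ad.8506 10 Ethernet1/3 L",
    "198.51.100.44 19:40:11 0050.56ad.7d68 10 (null) R 203.0.113.4",
]
for entry in map(parse_row, rows):
    kind = "remote via " + entry.remote_vtep if entry.remote_vtep else "local"
    print(entry.ip, entry.mac, kind)
```

This prints `198.51.100.11 0050.56ad.8506 local` and `198.51.100.44 0050.56ad.7d68 remote via 203.0.113.4`, matching the L and R flags.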

sudo ip neighbor flush all

server1:~$ ping 198.51.100.44

PING 198.51.100.44 (198.51.100.44) 56(84) bytes of data.

64 bytes from 198.51.100.44: icmp_seq=1 ttl=64 time=4.69 ms

This is the ARP response that was captured:

Frame 2: 60 bytes on wire (480 bits), 60 bytes captured (480 bits) on interface ens194, id 8

Ethernet II, Src: 00:50:56:ad:7d:68, Dst: 00:50:56:ad:85:06

Destination: 00:50:56:ad:85:06

Source: 00:50:56:ad:7d:68

Type: ARP (0x0806)

Padding: 000000000000000000000000000000000000

Address Resolution Protocol (reply)

Hardware type: Ethernet (1)

Protocol type: IPv4 (0x0800)

Hardware size: 6

Protocol size: 4

Opcode: reply (2)

Sender MAC address: 00:50:56:ad:7d:68

Sender IP address: 198.51.100.44

Target MAC address: 00:50:56:ad:85:06

Target IP address: 198.51.100.11

Notice that the switch created a frame with an Ethernet source address of SERVER-4 and sent it to SERVER-1.
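The 60-byte size of the captured reply follows from the frame layout. A 14-byte Ethernet header plus a 28-byte ARP payload is 42 bytes, so 18 bytes of zero padding bring it up to the 60-byte Ethernet minimum (64 bytes including the 4-byte FCS, which is not part of the capture). The Python sketch below builds the same reply byte by byte to illustrate the frame the switch generates on SERVER-4’s behalf; the function names are hypothetical.

```python
import socket
import struct


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' to 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", ""))


def build_arp_reply(sender_mac: str, sender_ip: str,
                    target_mac: str, target_ip: str) -> bytes:
    """Ethernet II + ARP reply, padded to the 60-byte minimum (FCS excluded)."""
    # Ethernet header: destination, source, EtherType 0x0806 (ARP).
    eth = mac_to_bytes(target_mac) + mac_to_bytes(sender_mac) + struct.pack("!H", 0x0806)
    # ARP: hardware type 1 (Ethernet), protocol 0x0800 (IPv4), sizes 6 and 4, opcode 2 (reply).
    arp = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 2)
    arp += mac_to_bytes(sender_mac) + socket.inet_aton(sender_ip)
    arp += mac_to_bytes(target_mac) + socket.inet_aton(target_ip)
    frame = eth + arp
    return frame + bytes(max(0, 60 - len(frame)))


# Values from the captured reply: SERVER-4 "answering" SERVER-1.
frame = build_arp_reply("00:50:56:ad:7d:68", "198.51.100.44",
                        "00:50:56:ad:85:06", "198.51.100.11")
print(len(frame), frame[42:].hex())
```

The output is 60 followed by 36 zero hex digits, the same 18-byte padding shown in the capture.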

Running some debugs for ARP suppression shows how the cache was populated from an EVPN route:

arp: arp_l2rib_register_for_remote_routes: Request for remote route: Registration=TRUE, Result=Success, VLAN=10, Producer=5

arp: arp_l2rib_msg_cb: (Type=Route) Length=224, RTFlag=0, Sequence=0, Delete=0, Producer=5, PeerID=0, MAC=0050.56ad.7d68, RemotehostIP=198.51.100.44, AFI=2

arp: arp_l2rib_msg_cb: hostIP=198.51.100.44, RemoteVtepAddrCount=1

arp: arp_l2rib_msg_cb: RNHs=203.0.113.4

arp: arp_cache_create_cache_node: hostIP=198.51.100.44, RemoteVtepAddrCount=1

arp: arp_cache_create_cache_node: RNHs=203.0.113.4

The following EVPN route is the one used to populate the ARP suppression cache:

Route Distinguisher: 192.0.2.6:32777

BGP routing table entry for [2]:[0]:[0]:[48]:[0050.56ad.7d68]:[32]:[198.51.100.44]/272, version 220

Paths: (2 available, best #2)

Flags: (0x000202) (high32 00000000) on xmit-list, is not in l2rib/evpn, is not in HW

Path type: internal, path is valid, not best reason: Neighbor Address, no labeled nexthop

AS-Path: NONE, path sourced internal to AS

203.0.113.4 (metric 81) from 192.0.2.12 (192.0.2.2)

Origin IGP, MED not set, localpref 100, weight 0

Received label 10000 10001

Extcommunity: RT:65000:10000 RT:65000:10001 ENCAP:8 Router MAC:00ad.7083.1b08

Originator: 192.0.2.6 Cluster list: 192.0.2.2

Advertised path-id 1

Path type: internal, path is valid, is best path, no labeled nexthop

Imported to 3 destination(s)

Imported paths list: Tenant1 L3-10001 L2-10000

AS-Path: NONE, path sourced internal to AS

203.0.113.4 (metric 81) from 192.0.2.11 (192.0.2.1)

Origin IGP, MED not set, localpref 100, weight 0

Received label 10000 10001

Extcommunity: RT:65000:10000 RT:65000:10001 ENCAP:8 Router MAC:00ad.7083.1b08

Originator: 192.0.2.6 Cluster list: 192.0.2.1

Path-id 1 not advertised to any peer
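The route key ties the EVPN route to the cache entry. As NX-OS displays a MAC/IP route, the bracketed fields are the route type (2), the Ethernet Segment Identifier, the Ethernet tag, the MAC length in bits, the MAC, the IP length in bits, and the IP address. The route also carries two labels: 10000 for the L2 VNI and 10001 for the L3 VNI, matching the L2-10000 and L3-10001 entries in the imported paths list. The Python sketch below is a hypothetical helper that extracts those fields from the displayed string and pairs them with the next hop. This yields the same host IP, MAC, and remote VTEP combination the debug output shows being installed. It parses display text only and ignores the trailing /272.

```python
import re
from typing import NamedTuple


class MacIpRoute(NamedTuple):
    route_type: int
    esi: str
    eth_tag: int
    mac_len: int
    mac: str
    ip_len: int
    ip: str


def parse_route_key(key: str) -> MacIpRoute:
    """Parse an NX-OS style '[2]:[esi]:[tag]:[48]:[mac]:[32]:[ip]/len' key."""
    fields = re.findall(r"\[([^\]]*)\]", key)  # text inside each [...] pair
    if len(fields) != 7 or fields[0] != "2":
        raise ValueError(f"not a MAC/IP (type 2) route key: {key!r}")
    rtype, esi, tag, mac_len, mac, ip_len, ip = fields
    return MacIpRoute(int(rtype), esi, int(tag), int(mac_len), mac, int(ip_len), ip)


route = parse_route_key("[2]:[0]:[0]:[48]:[0050.56ad.7d68]:[32]:[198.51.100.44]/272")
# Pair the route with its BGP next hop, like the debug output (hostIP, MAC, RNHs).
cache_entry = {"ip": route.ip, "mac": route.mac, "remote_vtep": "203.0.113.4"}
print(cache_entry)
```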

In this post we saw how knowing the MAC/IP of an endpoint through EVPN type 2 routes makes it possible to reduce ARP flooding in a VXLAN network by using the ARP suppression feature. The cache is populated by local learning and by importing information from EVPN. When there is a hit in the cache, the local switch creates an ARP response from the cached information and populates all the fields correctly, including the Ethernet source MAC address. If there is a miss in the cache, the frame is flooded as usual using ingress replication or multicast. In future posts we’ll look at some of the other use cases for having IPs in EVPN route type 2.

Hi Daniel,

You write:

> It’s important to note that there must be a SVI to be able to learn what IP is associated with a MAC. Without an SVI the switch would not be able to snoop any ARP frames.

I understand if this is a limitation on a given platform, but I can’t wrap my head around why this would be a universal technical limitation. That is, assuming that by SVI you mean a reachable IP address on the same L2 segment. I don’t understand why the data plane can’t simply snoop ARP (and its equivalents) passing through without needing an IP address in the same segment.

Am I missing something?

// bc

Hi bc. Nice to hear from you 🙂

Sure, you could snoop ARP frames without having an SVI, but then you would need to snoop all ARP frames and not only the ones destined to the MAC address of the SVI. In addition, the SVI is needed for any L3 forwarding; otherwise the L2 segment would be isolated. There could be scenarios where that is desirable, of course, and then you could skip configuring the SVI.

Hi Daniel and bc,

I would like to point out that it is possible to use ARP suppression without an active SVI… I have such a scenario in a mixed (N9300 + N5600) topology. There are (valid and imported) MAC/IP EVPN type 2 routes without L3 attributes such as a second MPLS label, the L3VNI label, etc. Such a route looks identical to a MAC-only route plus an IP address. Another interesting detail is that the output of the command “show ip arp suppression-cache detail” lists the flag as “L2” and not “L”.

Of course – that scenario is only for migration and has L3 gateway out of the fabric.

That’s interesting! It must be one of those scenarios that work but aren’t supported, as Cisco mentions in the docs that you need to have the anycast gateway (SVI).

Yes, I think this is the tricky part. I was wondering how it can (or dares to) work, because according to the Cisco VXLAN guide:

“ARP suppression is only supported for a VNI if the VTEP hosts the First-Hop Gateway (Distributed Anycast Gateway) for this VNI. The VTEP and the SVI for this VLAN have to be properly configured for the distributed Anycast Gateway operation, for example, global Anycast Gateway MAC address configured and Anycast Gateway feature with the virtual IP address on the SVI”

I have the “DAG-related config parts” configured, but the respective SVIs for the L2VNI are in the “admin shutdown” state, ready for the migration scenario :-). So I have to confess that this “working scenario” was rather unintentional. I will have to simulate this in my lab to learn more, and to see whether the N9000v behaves the same as the “real” hardware.

Another interesting fact is that the ARP suppression feature lets hosts work within an L2VNI VXLAN segment even if underlay multicast is broken (the N5K does not support IR, so multicast is mandatory). In other words, there is no support for BUM traffic for that VNI! For SOME workloads/VMs, support for “simple” ARP is obviously enough.

I still have some questions (or topics to study) regarding ARP suppression: are there any timers? I can see some “pseudo-stale” entries older than 2 years with APIPA IP addresses. At first glance it does not make sense not to age them out, if only because of TCAM resource depletion.