There are two main methods that can be used to forward broadcast, unknown unicast, and multicast (BUM) frames in a VXLAN network:

- Ingress replication.

- Multicast in the underlay.

In this post, we take a detailed look at how BUM frames are forwarded using multicast in the underlay. We are using the topology from my Building a VXLAN Lab Using Nexus9000v post. The Spine switches are configured with the PIM Anycast RP feature on Nexus, so no MSDP is used between the RPs. To forward broadcast frames such as ARP in our topology, the following is required:

- The VTEPs must signal that they want to join the shared tree for 239.0.0.1 (to receive multicast) using a PIM Join.

- The VTEPs must signal that they intend to send multicast for 239.0.0.1 on the source tree using a PIM Register.

- The RPs must share information about the sources that they know of by forwarding PIM Register messages.

- The VTEPs must encapsulate ARP packets in VXLAN and forward them in the underlay using multicast.
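The third requirement, RPs sharing their known sources by forwarding PIM Registers, is what Anycast RP provides in place of MSDP. Below is a minimal Python sketch of that forwarding rule in the style of RFC 4610, assuming the Anycast-RP members from this lab (192.0.2.1 and 192.0.2.2). The class and method names are hypothetical, the Register sender address for Leaf1 is an assumption, and Register-Stop, timers, and encapsulated data are left out.

```python
from dataclasses import dataclass, field


@dataclass
class AnycastRP:
    """Hypothetical model of one member of an Anycast-RP set (RFC 4610 style)."""
    local_addr: str                 # this RP's unique address
    members: list[str]              # every member of the set, including local_addr
    sources: set[tuple[str, str]] = field(default_factory=set)  # learned (S, G) pairs

    def receive_register(self, sender: str, s: str, g: str) -> list[tuple[str, str, str, str]]:
        """Process a PIM Register for (s, g) and return forwarded copies as (from, to, S, G)."""
        self.sources.add((s, g))
        if sender in self.members:
            # Copy from another RP in the set: learn the source but never re-forward,
            # otherwise Registers would loop between the members.
            return []
        # Register from a first-hop router (a VTEP here): send one copy to every
        # other member, sourced from this RP's unique address.
        return [(self.local_addr, peer, s, g) for peer in self.members if peer != self.local_addr]


MEMBERS = ["192.0.2.1", "192.0.2.2"]
spine1 = AnycastRP("192.0.2.1", MEMBERS)
spine2 = AnycastRP("192.0.2.2", MEMBERS)

# Leaf1 (VTEP 203.0.113.1) registers as a source for 239.0.0.1 with Spine1.
# Assumed Register sender address; the real one depends on the platform.
copies = spine1.receive_register("203.0.113.1", "203.0.113.1", "239.0.0.1")
for sender, _peer, s, g in copies:
    spine2.receive_register(sender, s, g)
```

After this runs, both Spines know about the (203.0.113.1, 239.0.0.1) source even though Leaf1 only registered with Spine1.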

The Leaf switches have the following configuration to enable multicast:

ip pim rp-address 192.0.2.255 group-list 224.0.0.0/4

interface nve1

source-interface loopback1

member vni 1000

mcast-group 239.0.0.1

interface Ethernet1/1

no switchport

mtu 9216

medium p2p

ip address 198.51.100.1/31

ip ospf network point-to-point

no ip ospf passive-interface

ip router ospf UNDERLAY area 0.0.0.0

ip pim sparse-mode

no shutdown

interface Ethernet1/2

no switchport

mtu 9216

medium p2p

ip address 198.51.100.9/31

ip ospf network point-to-point

ip router ospf UNDERLAY area 0.0.0.0

ip pim sparse-mode

no shutdown
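With many VNIs, the NVE stanza is often generated rather than typed. Here is a minimal Python sketch, assuming a hypothetical `render_nve_config` helper that takes a VNI-to-group mapping. It only emits the NVE commands shown above (indented for readability) and rejects groups that are not multicast addresses.

```python
import ipaddress


def render_nve_config(source_interface: str, vni_groups: dict[int, str]) -> str:
    """Render an interface nve1 stanza mapping each VNI to an underlay multicast group."""
    lines = ["interface nve1", f"  source-interface {source_interface}"]
    for vni, group in sorted(vni_groups.items()):
        if not 0 <= vni < 2**24:
            raise ValueError(f"VNI {vni} does not fit in 24 bits")
        if not ipaddress.ip_address(group).is_multicast:
            raise ValueError(f"{group} is not a multicast address")
        lines.append(f"  member vni {vni}")
        lines.append(f"    mcast-group {group}")
    return "\n".join(lines)


config = render_nve_config("loopback1", {1000: "239.0.0.1"})
print(config)
```

Validating the group up front catches a typo such as a unicast address before it reaches the switch.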

Let’s first verify that the Leaf has PIM neighbors and is aware of the RP:

Leaf1# show ip pim nei

PIM Neighbor Status for VRF "default"

Neighbor Interface Uptime Expires DR Bidir- BFD ECMP Redirect

Priority Capable State Capable

198.51.100.0 Ethernet1/1 3d21h 00:01:19 1 yes n/a no

198.51.100.8 Ethernet1/2 3d22h 00:01:31 1 yes n/a no

Leaf1# show ip pim rp vrf default

PIM RP Status Information for VRF "default"

BSR disabled

Auto-RP disabled

BSR RP Candidate policy: None

BSR RP policy: None

Auto-RP Announce policy: None

Auto-RP Discovery policy: None

RP: 192.0.2.255, (0),

uptime: 3d22h priority: 255,

RP-source: (local),

group ranges:

224.0.0.0/4

Let’s also verify that the Spines are aware of each other as RPs:

Spine1# show ip pim rp vrf default

PIM RP Status Information for VRF "default"

BSR disabled

Auto-RP disabled

BSR RP Candidate policy: None

BSR RP policy: None

Auto-RP Announce policy: None

Auto-RP Discovery policy: None

Anycast-RP 192.0.2.255 members:

192.0.2.1* 192.0.2.2

RP: 192.0.2.255*, (0),

uptime: 10w0d priority: 255,

RP-source: (local),

group ranges:

224.0.0.0/4

Everything looks as expected.

Currently, the Leafs have their NVE interfaces shut down so that we can capture the entire interaction. I will start a Wireshark capture on my sniffer to record the PIM packets.

Currently, Leaf1 has no multicast routing state for 239.0.0.1; the only entry is the default (*, 232.0.0.0/8) entry for the SSM range:

Leaf1# show ip mroute

IP Multicast Routing Table for VRF "default"

(*, 232.0.0.0/8), uptime: 3d22h, pim ip

Incoming interface: Null, RPF nbr: 0.0.0.0

Outgoing interface list: (count: 0)

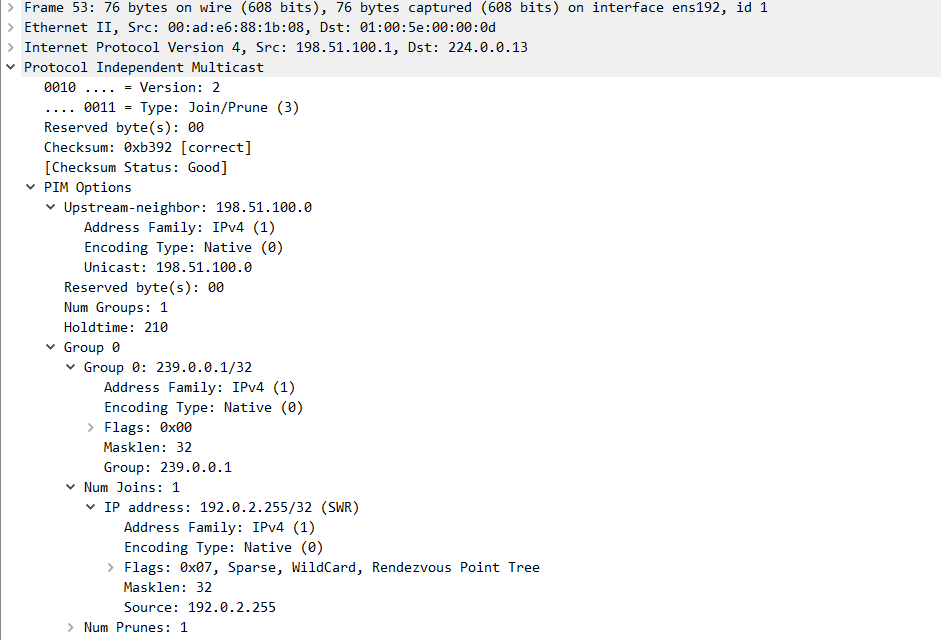

The NVE interface is brought up and Leaf1 sends a PIM Join towards the RP:

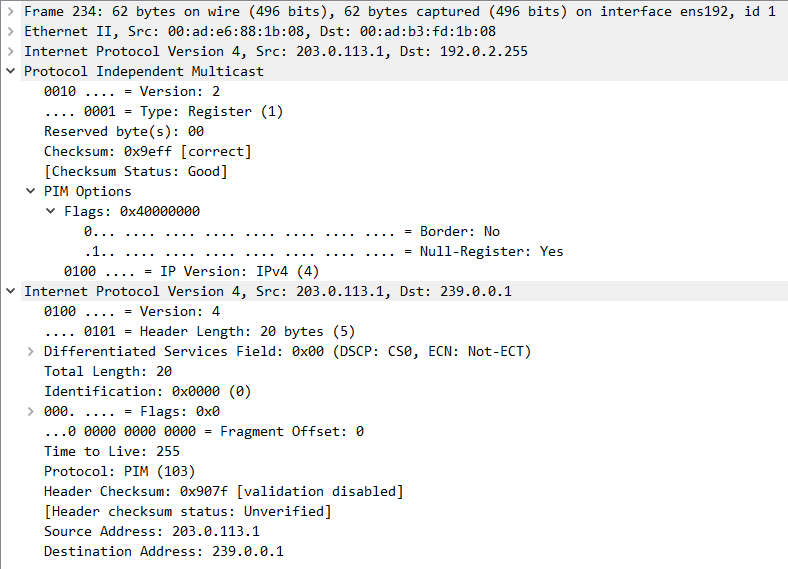

Leaf1 also sends a PIM Register:

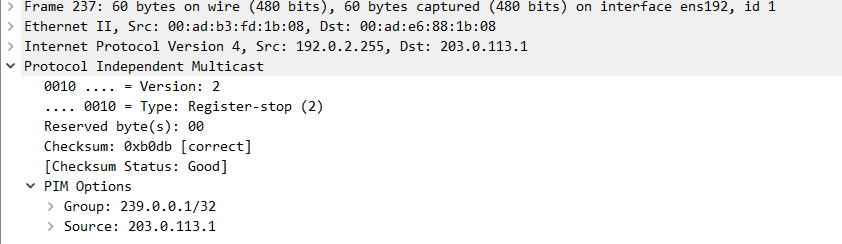

The RP (Spine1) then sends a PIM Register Stop:

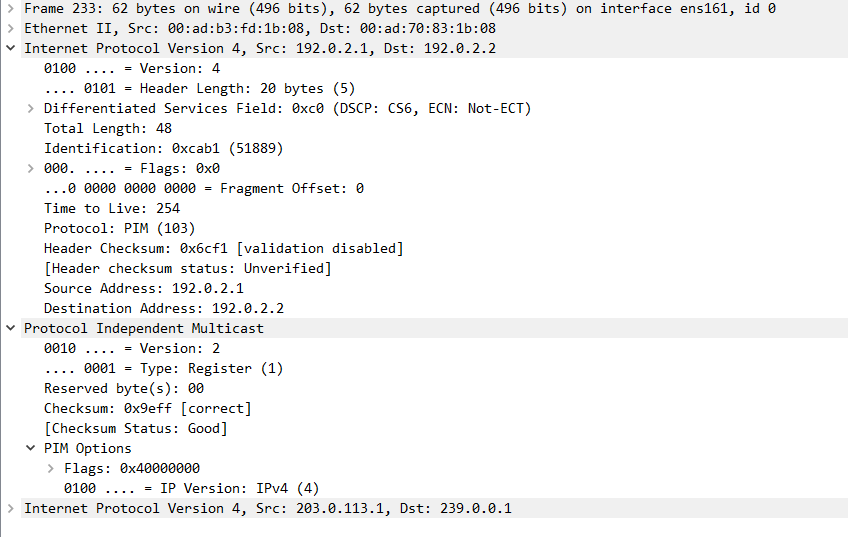

Spine1 also forwards the PIM Register to Spine2 as they are part of the same Anycast RP set:

At this point, Leaf1 is on both the shared tree and the source tree:

Leaf1# show ip mroute 239.0.0.1

IP Multicast Routing Table for VRF "default"

(*, 239.0.0.1/32), uptime: 00:27:06, nve ip pim

Incoming interface: Ethernet1/1, RPF nbr: 198.51.100.0

Outgoing interface list: (count: 1)

nve1, uptime: 00:27:06, nve

(203.0.113.1/32, 239.0.0.1/32), uptime: 00:27:06, nve mrib ip pim

Incoming interface: loopback1, RPF nbr: 203.0.113.1

Outgoing interface list: (count: 1)

Ethernet1/1, uptime: 00:26:08, pim

For the shared tree (*, 239.0.0.1), the incoming interface is Eth1/1, the RPF interface towards the RP on the Spine, and the outgoing interface is nve1, the VXLAN interface. For the source tree (203.0.113.1, 239.0.0.1), the source is 203.0.113.1, the address of loopback1, which is the NVE source interface. The incoming interface is therefore loopback1, and the outgoing interface is Eth1/1 towards Spine1.
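Reading (*, G) and (S, G) entries by eye gets tedious as VTEPs are added. Below is a minimal Python sketch that parses `show ip mroute` text in the format shown above into a dictionary keyed by (S, G). The regular expressions are assumptions based only on these outputs, not on a documented NX-OS format, so other software versions may print fields differently. The sample text is the Leaf1 output above, re-indented.

```python
import re

ENTRY = re.compile(r"^\((?P<src>\*|[\d.]+)(?:/\d+)?, (?P<grp>[\d.]+)/\d+\)")
IIF = re.compile(r"^Incoming interface: (?P<iif>[^,]+), RPF nbr: (?P<rpf>[\d.]+)")
OIF = re.compile(r"^(?P<oif>[^,\s]+), uptime:")


def parse_mroute(text: str) -> dict[tuple[str, str], dict]:
    """Parse `show ip mroute` text into {(S, G): {"iif", "rpf", "oifs"}}."""
    table: dict[tuple[str, str], dict] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if m := ENTRY.match(line):
            # New (S, G) or (*, G) entry; the following lines belong to it.
            current = {"iif": None, "rpf": None, "oifs": []}
            table[(m["src"], m["grp"])] = current
        elif current is not None and (m := IIF.match(line)):
            current["iif"], current["rpf"] = m["iif"], m["rpf"]
        elif current is not None and (m := OIF.match(line)):
            current["oifs"].append(m["oif"])
    return table


LEAF1_OUTPUT = """
(*, 239.0.0.1/32), uptime: 00:27:06, nve ip pim
  Incoming interface: Ethernet1/1, RPF nbr: 198.51.100.0
  Outgoing interface list: (count: 1)
    nve1, uptime: 00:27:06, nve
(203.0.113.1/32, 239.0.0.1/32), uptime: 00:27:06, nve mrib ip pim
  Incoming interface: loopback1, RPF nbr: 203.0.113.1
  Outgoing interface list: (count: 1)
    Ethernet1/1, uptime: 00:26:08, pim
"""

mroutes = parse_mroute(LEAF1_OUTPUT)
shared = mroutes[("*", "239.0.0.1")]            # shared tree: from the RP, out to nve1
source = mroutes[("203.0.113.1", "239.0.0.1")]  # source tree: from loopback1, out to the Spine
```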

The RP is also aware of Leaf1:

Spine1# show ip mroute 239.0.0.1

IP Multicast Routing Table for VRF "default"

(*, 239.0.0.1/32), uptime: 00:30:17, pim ip

Incoming interface: loopback1, RPF nbr: 192.0.2.255

Outgoing interface list: (count: 1)

Ethernet1/1, uptime: 00:30:17, pim

(203.0.113.1/32, 239.0.0.1/32), uptime: 00:29:20, pim mrib ip

Incoming interface: Ethernet1/1, RPF nbr: 198.51.100.1, internal

Outgoing interface list: (count: 1)

Ethernet1/1, uptime: 00:26:20, pim, (RPF)

Next, we bring up the NVE interface on Leaf4 and verify the mroutes on the Spines again:

Spine1# show ip mroute 239.0.0.1

IP Multicast Routing Table for VRF "default"

(*, 239.0.0.1/32), uptime: 00:37:00, pim ip

Incoming interface: loopback1, RPF nbr: 192.0.2.255

Outgoing interface list: (count: 1)

Ethernet1/1, uptime: 00:37:00, pim

(203.0.113.1/32, 239.0.0.1/32), uptime: 00:36:03, pim mrib ip

Incoming interface: Ethernet1/1, RPF nbr: 198.51.100.1, internal

Outgoing interface list: (count: 1)

Ethernet1/1, uptime: 00:33:03, pim, (RPF)

(203.0.113.4/32, 239.0.0.1/32), uptime: 00:04:32, pim mrib ip

Incoming interface: Ethernet1/4, RPF nbr: 198.51.100.7, internal

Outgoing interface list: (count: 1)

Ethernet1/1, uptime: 00:04:32, pim

Spine2# show ip mroute 239.0.0.1

IP Multicast Routing Table for VRF "default"

(*, 239.0.0.1/32), uptime: 00:02:08, pim ip

Incoming interface: loopback1, RPF nbr: 192.0.2.255

Outgoing interface list: (count: 1)

Ethernet1/4, uptime: 00:02:08, pim

(203.0.113.1/32, 239.0.0.1/32), uptime: 00:33:04, pim ip

Incoming interface: Ethernet1/1, RPF nbr: 198.51.100.9, internal

Outgoing interface list: (count: 1)

Ethernet1/4, uptime: 00:02:08, pim

(203.0.113.4/32, 239.0.0.1/32), uptime: 00:01:33, pim mrib ip

Incoming interface: Ethernet1/4, RPF nbr: 198.51.100.15, internal

Outgoing interface list: (count: 0)

Now let’s clear the ARP cache on Server1 (198.51.100.11) and ping Server4 (198.51.100.44):

cisco@server1:~$ sudo ip neighbor flush dev ens160

cisco@server1:~$ ping 198.51.100.44

PING 198.51.100.44 (198.51.100.44) 56(84) bytes of data.

64 bytes from 198.51.100.44: icmp_seq=1 ttl=64 time=9.46 ms

64 bytes from 198.51.100.44: icmp_seq=2 ttl=64 time=5.03 ms

64 bytes from 198.51.100.44: icmp_seq=3 ttl=64 time=4.19 ms

64 bytes from 198.51.100.44: icmp_seq=4 ttl=64 time=4.35 ms

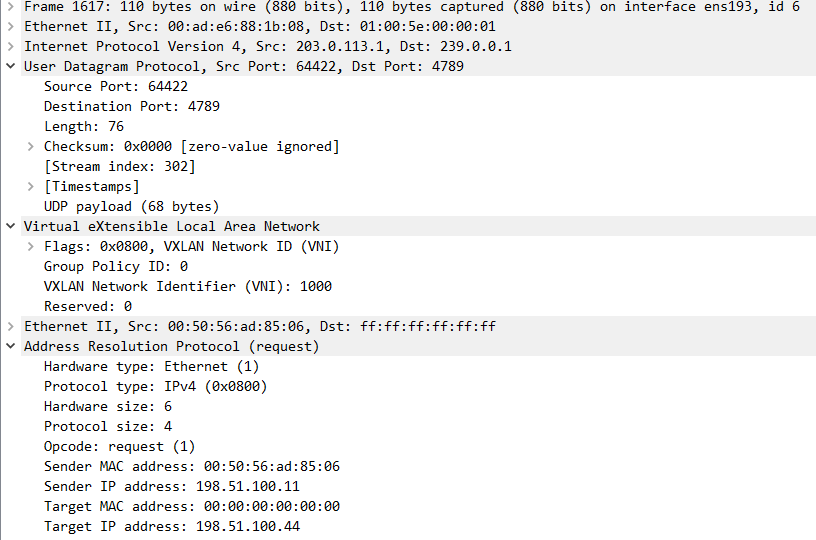

Server1 sends ARP request that is a broadcast frame as the MAC address of Server4 is unknown:

Note that the frame is VXLAN encapsulated and sent to 239.0.0.1 using multicast. The UDP destination port is 4789 (VXLAN), and the UDP source port is derived from a hash of fields in the inner frame, which provides entropy for ECMP load balancing in the underlay.
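To make the encapsulation concrete, here is a minimal Python sketch of the 8-byte VXLAN header defined in RFC 7348 and of an entropy-based UDP source port. RFC 7348 recommends hashing inner-frame fields for the source port and keeping it in the dynamic range 49152-65535; the CRC-32 over the inner Ethernet header used below is an assumption standing in for whatever hash the switch hardware actually uses. The source MAC address is hypothetical.

```python
import struct
import zlib

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags (I bit set), 24 reserved bits, VNI, 8 reserved bits."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # '!B3xI' = 1 flags byte, 3 zero bytes, then a 32-bit word holding VNI << 8,
    # so the VNI lands in bytes 4-6 and byte 7 stays zero.
    return struct.pack("!B3xI", 0x08, vni << 8)


def udp_source_port(inner_frame: bytes) -> int:
    """Map a hash of the inner Ethernet header into the dynamic range 49152-65535."""
    # CRC-32 is a stand-in hash; the first 14 bytes are dst MAC, src MAC, and EtherType.
    return 49152 + zlib.crc32(inner_frame[:14]) % 16384


# ARP request from Server1: broadcast destination, hypothetical source MAC, EtherType 0x0806,
# followed by a zeroed 28-byte ARP payload.
arp_frame = bytes.fromhex("ffffffffffff" "005056000001" "0806") + bytes(28)
header = vxlan_header(1000)
sport = udp_source_port(arp_frame)
```

Because the hash covers only the inner headers, every frame of the same flow gets the same source port and therefore follows the same ECMP path through the Spines.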

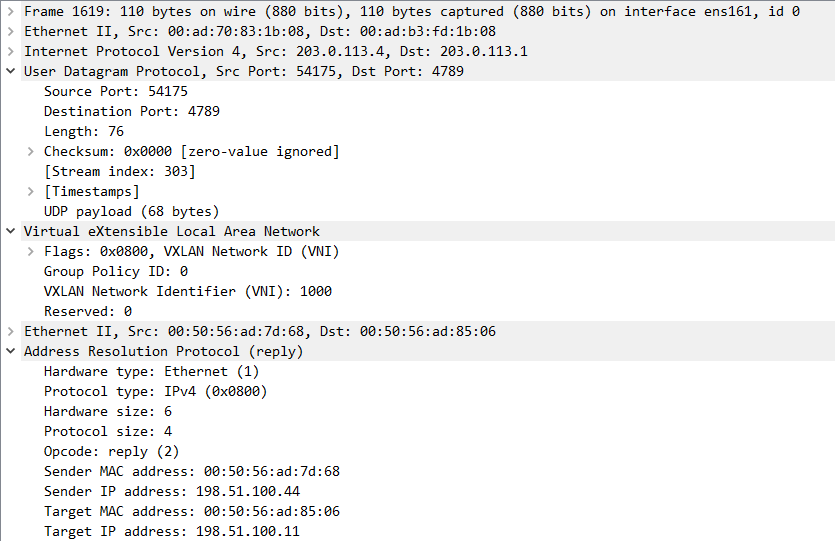

Note that when the ARP response comes back, Server4 has learned the MAC address of Server1 so there is no need for a broadcast. The ARP response is unicast and is also sent as unicast between Leaf4 and Leaf1:
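The flood-and-learn behavior on a VTEP can be sketched in Python as follows: decapsulated frames teach the VTEP which remote VTEP each inner source MAC sits behind, and known unicast MACs are then sent to that VTEP instead of the multicast group. This is a minimal model assuming VNI 1000 and group 239.0.0.1 from the configuration above; the class name and MAC addresses are hypothetical, and MAC aging and moves are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class FloodAndLearnVtep:
    """Hypothetical model of data-plane MAC learning on a flood-and-learn VTEP."""
    vni_groups: dict[int, str]  # VNI -> underlay multicast group
    remote_macs: dict[tuple[int, str], str] = field(default_factory=dict)  # (VNI, MAC) -> VTEP IP

    def learn(self, vni: int, inner_src_mac: str, outer_src_ip: str) -> None:
        """On decapsulation, remember which remote VTEP the inner source MAC sits behind."""
        self.remote_macs[(vni, inner_src_mac.lower())] = outer_src_ip

    def outer_destination(self, vni: int, inner_dst_mac: str) -> str:
        """Unicast to the learned VTEP for known MACs; flood BUM traffic to the VNI's group."""
        return self.remote_macs.get((vni, inner_dst_mac.lower()), self.vni_groups[vni])


SERVER1_MAC = "00:50:56:00:00:01"  # hypothetical
leaf4 = FloodAndLearnVtep({1000: "239.0.0.1"})

# The flooded ARP request from Server1 arrives with Leaf1's VTEP address as outer source.
leaf4.learn(1000, SERVER1_MAC, "203.0.113.1")
reply_destination = leaf4.outer_destination(1000, SERVER1_MAC)  # unicast to Leaf1
```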

There you have it: VXLAN flood and learn with multicast in the underlay for BUM frames. In another post, we will take a look at ingress replication. See you next time!

Hi Daniel,

Thanks a lot for your great content. I have been following all your posts on VxLAN, and they were very helpful. I have a small question; it is more about multicast than VxLAN. Since the PIM Join packet’s destination is 224.0.0.13, if there are multiple PIM-enabled upstream router interfaces on the Last Hop Router (LHR), how does it decide the way to the RP in PIM-SM? (I can see that the PIM options include an upstream neighbor address.) Does the LHR look up the RP IP in the unicast routing table and include the result in the PIM Join so that the packet is guided its way to the RP?

I looked for this in several resources and couldn’t find it, not even in the ENCOR OCG.

Hi,

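The unicast RIB is used to find where to send the packet towards the RP. The LHR performs an RPF lookup for the RP address and puts the resulting next hop in the Upstream Neighbor Address field of the Join. Every PIM router on the link receives the Join sent to 224.0.0.13, but only the router that owns that address adds the interface to its outgoing interface list.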
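A minimal Python sketch of that lookup: a longest-prefix match on the RP address picks the RPF interface and next hop, and the next hop is placed in the upstream neighbor field of a (*, G) Join addressed to 224.0.0.13. The RIB contents and function names are hypothetical, and ECMP tie-breaking between equal routes is ignored.

```python
import ipaddress

ALL_PIM_ROUTERS = "224.0.0.13"


def rpf_lookup(rib: list[tuple[str, str, str]], target: str) -> tuple[str, str]:
    """Longest-prefix match of target in (prefix, interface, next_hop) routes."""
    addr = ipaddress.ip_address(target)
    best = None
    for prefix, iface, next_hop in rib:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, iface, next_hop)
    if best is None:
        raise LookupError(f"no route to {target}")
    return best[1], best[2]


def build_star_g_join(rib: list[tuple[str, str, str]], rp: str, group: str) -> dict:
    """Build a (*, G) Join toward the RP; only the named upstream neighbor acts on it."""
    iface, upstream = rpf_lookup(rib, rp)
    return {"dst": ALL_PIM_ROUTERS, "out_interface": iface,
            "upstream_neighbor": upstream, "join": ("*", group)}


# Hypothetical RIB on a last-hop router with two PIM-enabled uplinks.
RIB = [
    ("0.0.0.0/0", "Ethernet1/2", "198.51.100.8"),
    ("192.0.2.255/32", "Ethernet1/1", "198.51.100.0"),
]
join = build_star_g_join(RIB, "192.0.2.255", "239.0.0.1")
```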

Hi Daniel,

Great post! It really got me thinking further.

I have a question about the multicast group configuration in a large VxLAN fabric. In our setup, all VNIs are configured with the same multicast group 239.0.0.1, as shown below:

interface nve1

…

member vni 10010

mcast-group 239.0.0.1

member vni 10011

mcast-group 239.0.0.1

member vni 10012

mcast-group 239.0.0.1

member vni 10013

mcast-group 239.0.0.1

…

I’ve noticed that many online configuration examples also use the same multicast group for multiple VNIs. While this approach simplifies the configuration, it raises a few questions:

Does this mean that all VTEPs have to join the same ASM multicast tree?

When each VTEP sends a PIM register to the RP with its own VTEP IP as the source IP and the same group 239.0.0.1 as the target group, how do other VTEPs choose which SSM tree to join? Or do they just join all SSM shared trees?

Regarding BUM traffic, would every VTEP receive the BUM packets and then have to check if it has the corresponding VNI configured?

Thanks,

John

Hi John!

Thanks!

I haven’t tested this in detail, but I believe this is how it should work:

Yes, all VTEPs will join the shared tree. With a unique multicast group per VNI, each VTEP would likewise join the shared tree of every group mapped to one of its configured VNIs; the only difference here is that multiple VNIs share the same shared tree.

The VTEPs will send a PIM Register, which creates the source tree (not SSM; that is a different mode of multicast). In PIM ASM, the move from the shared tree to the source tree is triggered by receiving traffic from a source to that group. For example, if a VTEP with an NVE address of 10.0.0.1 encapsulates an ARP request in a VXLAN packet and sends it to 239.0.0.1, all the other VTEPs that receive this packet on the shared tree will then request to join the source tree (S, G) = (10.0.0.1, 239.0.0.1).
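The switchover described above can be sketched in Python as a simplified model, assuming an SPT threshold of zero (switch on the first packet) and omitting the (S, G, rpt) Prune that later removes the source from the shared tree. The class name is hypothetical; 10.0.0.1 is the example address from the reply.

```python
from dataclasses import dataclass, field


@dataclass
class LastHopVtep:
    """Hypothetical model of a receiving VTEP moving from the shared tree to the source tree."""
    joined_groups: set[str]                                      # groups with (*, G) state
    sg_state: set[tuple[str, str]] = field(default_factory=set)  # (S, G) entries created so far

    def on_shared_tree_packet(self, source: str, group: str) -> list[str]:
        """Return the PIM messages triggered by a packet received on the (*, G) tree."""
        if group not in self.joined_groups or (source, group) in self.sg_state:
            return []  # not interested, or already on the source tree
        # SPT threshold of zero assumed: switch over on the very first packet.
        self.sg_state.add((source, group))
        return [f"Join ({source}, {group}) towards {source}"]


vtep = LastHopVtep({"239.0.0.1"})
first = vtep.on_shared_tree_packet("10.0.0.1", "239.0.0.1")   # triggers the (S, G) Join
second = vtep.on_shared_tree_packet("10.0.0.1", "239.0.0.1")  # already switched over
```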

With multiple VNIs mapped to the same multicast group, BUM frames are sent to every VTEP that has joined the group, and each VTEP has to drop the frame if the VNI does not exist locally. Some bandwidth is wasted, but this is probably acceptable, as it shouldn’t affect performance in a high-speed network, which a VXLAN network typically is.
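The receive-side check can be sketched in Python: read the VNI from the 8-byte VXLAN header (RFC 7348 layout) and accept the frame only if that VNI is configured locally. The VNIs mirror John’s example; the function names are hypothetical, and a real VTEP typically does this in hardware.

```python
import struct


def parse_vni(vxlan_payload: bytes) -> int:
    """Return the 24-bit VNI from the VXLAN header at the start of the UDP payload."""
    if len(vxlan_payload) < 8:
        raise ValueError("truncated VXLAN header")
    flags, word = struct.unpack("!B3xI", vxlan_payload[:8])
    if not flags & 0x08:
        raise ValueError("I flag not set, VNI is not valid")
    return word >> 8  # VNI occupies bytes 4-6; byte 7 is reserved


def accept_bum(vxlan_payload: bytes, local_vnis: set[int]) -> bool:
    """Return True if the VNI exists locally; otherwise the frame should be dropped."""
    return parse_vni(vxlan_payload) in local_vnis


# BUM frame for VNI 10012 (header plus a zeroed 14-byte inner Ethernet header).
packet = struct.pack("!B3xI", 0x08, 10012 << 8) + bytes(14)
on_vtep_a = accept_bum(packet, {10010, 10011})  # dropped: VNI 10012 not configured
on_vtep_b = accept_bum(packet, {10012, 10013})  # accepted: VNI 10012 is configured
```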