In some scenarios it is really useful to be able to simulate a WAN with regard to latency, jitter, and packet loss, especially for those of us who work with SD-WAN and want to test our policies in a controlled environment. In this post I will describe how I built a WAN impairment device in Linux for a VMware vSphere environment and how I can simulate different conditions.

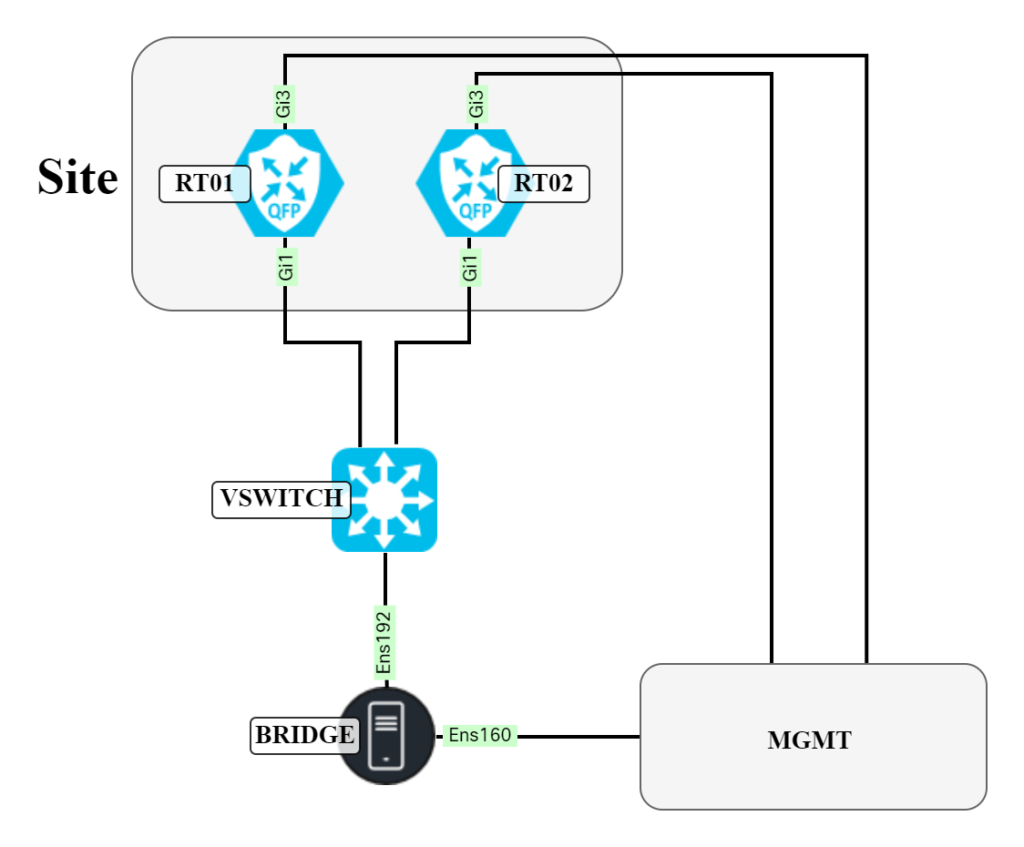

My SD-WAN lab is built on VMware vSphere using Catalyst SD-WAN with Catalyst8000v as virtual routers and on-premises controllers. The goal with the WAN impairment device is to be able to manipulate each internet connection to a router individually. That way I can simulate that a particular connection or router is having issues while other connections/routers are not. I don’t want to impose the same conditions on all connections/devices simultaneously. To do this, I have built a physical topology that looks like this:

All devices are connected to a management network that I can access via a VPN. This way I have “out of band” access to all devices and can use SSH to configure my routers with a bootstrap configuration. To avoid having to create many unique VLANs in the vSwitch, I created a single network using VLAN 4095, which on a vSphere standard switch passes tagged frames through to the guest. Many devices can then connect to the same network, and each point-to-point connection is simulated by tagging with a different VLAN. This network can be seen below:

For example, VLAN 542 connects to one router at a site and VLAN 544 connects to the other router at that site. This is implemented on the Linux device using subinterfaces. My choice of Linux host is an Ubuntu device (22.04.2 LTS):

daniel@bridge:~$ uname -a

Linux bridge 5.19.0-45-generic #46~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Wed Jun 7 15:06:04 UTC 20 x86_64 x86_64 x86_64 GNU/Linux

This should work on other Linux distributions as well.

To create the subinterfaces, the ip utility is used. First check that the 802.1Q module is available on the system:

daniel@bridge:~$ modinfo 8021q

filename: /lib/modules/5.19.0-45-generic/kernel/net/8021q/8021q.ko

version: 1.8

license: GPL

alias: rtnl-link-vlan

srcversion: 71693C415A70693A9C193B7

depends: mrp,garp

retpoline: Y

intree: Y

name: 8021q

vermagic: 5.19.0-45-generic SMP preempt mod_unload modversions

sig_id: PKCS#7

signer: Build time autogenerated kernel key

sig_key: 30:BB:A7:54:11:69:AD:F5:53:2E:FB:16:58:C1:A2:40:26:50:61:53

sig_hashalgo: sha512

signature: A5:01:09:AA:CA:9A:27:2C:3C:F3:F4:1D:1D:FA:5F:21:A7:57:D5:15:

BA:D0:FE:11:9C:12:19:4A:C2:C1:96:F3:60:E5:A7:E6:76:2A:BC:E3:

3D:77:66:D0:67:35:CF:05:86:40:20:81:B1:D6:78:E9:94:FB:F1:37:

81:7B:1C:66:3B:38:4D:27:C8:56:3F:56:FC:45:3D:28:18:61:AA:92:

82:DF:1B:D9:CA:82:1E:15:AD:E0:FC:47:D8:C4:34:13:20:2D:43:25:

1F:95:75:CB:F9:0B:AD:8D:32:A0:3A:4C:45:FF:1E:32:72:4C:02:A1:

64:0C:B7:7E:EC:67:E9:6B:13:89:E2:58:87:DE:BA:5B:35:4B:9E:28:

A6:48:FD:B0:F4:09:02:43:24:3E:24:60:31:1D:0D:7F:17:F6:CD:E4:

9A:E5:02:2D:2D:FA:DE:77:1A:62:CA:80:7F:3C:09:B2:5E:F8:33:95:

A4:A4:EF:78:B2:A6:77:2A:7B:DF:84:14:22:36:FA:72:9D:AB:71:AE:

07:88:55:C2:05:80:CF:F4:96:CE:67:9F:7B:25:0A:70:8C:E3:04:AA:

F8:98:65:76:0A:5A:BB:D7:1A:CB:52:3C:73:40:2A:38:CF:34:3C:38:

B6:9A:C5:9F:60:6C:07:1A:65:87:88:5A:57:39:7F:77:3A:D9:42:71:

7F:4F:F5:BB:B4:F9:33:5C:5C:AA:95:02:F3:EA:E9:24:68:35:0F:70:

32:94:8C:5C:E3:31:C7:7B:F3:09:77:45:CF:91:C9:7B:CB:4E:9F:33:

5A:50:F3:6C:EA:20:CB:A9:D9:08:E2:A7:95:B9:AD:71:FD:FC:37:90:

E8:7D:C6:EF:4B:D0:4C:26:EB:E1:1C:20:88:01:6A:02:8D:47:90:B8:

36:EF:6D:C9:D8:53:72:F0:8F:FC:CD:01:D0:70:4C:6D:19:2E:A0:32:

91:A5:68:6A:F0:08:E9:2E:82:BC:53:DA:23:32:88:21:CB:C5:18:6E:

0A:8E:63:9F:33:C2:C5:75:FC:53:22:BA:60:68:57:C0:C1:BC:DF:94:

4B:05:20:DE:C6:D7:B6:7F:C4:90:80:D6:72:21:74:F8:C9:C3:56:8A:

DD:C7:5E:DE:4D:F0:6B:B2:03:06:34:53:16:3D:B1:32:C0:7E:24:4B:

0C:9F:97:02:CB:71:B9:43:D6:2B:41:31:B0:24:78:A8:FD:F3:94:BD:

2D:97:44:15:80:67:C0:1A:3B:6E:A1:0B:62:CE:87:82:AE:91:F5:61:

9C:38:E6:BB:8B:A4:63:A3:7F:DB:1D:D5:EE:B7:F2:F8:95:E5:BE:FC:

BB:5E:36:EC:45:73:53:E7:AE:69:4A:31

If the module for some reason has not been installed or loaded into the kernel, use sudo apt-get install vlan to install it and sudo modprobe 8021q to load it.
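Note that modinfo only shows that the module exists on disk. Whether it is currently loaded can be read from /proc/modules, which is also what lsmod reports. Here is a minimal sketch of such a check; the function name module_loaded and its optional second argument are hypothetical helpers of mine, not part of any tool:

```shell
# Hypothetical helper: succeeds if the named module appears in the module list.
# The second argument defaults to /proc/modules and exists mainly to ease testing.
module_loaded() {
    local module="$1" list="${2:-/proc/modules}"
    # Each line of /proc/modules starts with the module name followed by a space.
    grep -q "^${module} " "$list" 2>/dev/null
}

if module_loaded 8021q; then
    echo "8021q is loaded"
else
    echo "8021q is not loaded; try: sudo modprobe 8021q"
fi
```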

The next step is to start adding interfaces using the ip utility. Add the interface:

sudo ip link add link ens192 name ens192.542 type vlan id 542

The ip link add link ens192 part defines which interface to add the VLAN to. The subinterface is then given a name, and finally the VLAN ID is defined. Next, set the interface to be up:

sudo ip link set dev ens192.542 up

Then add an IP address to the interface:

daniel@bridge:~$ sudo ip addr add 192.0.2.57/30 dev ens192.542

To view information about the interface, use the ip -d link show command:

daniel@bridge:~$ ip -d link show ens192.542

13: ens192.542@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff promiscuity 0 minmtu 0 maxmtu 65535

vlan protocol 802.1Q id 542 <REORDER_HDR> addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

Notice the VLAN protocol and ID.

When done, there will be a lot of subinterfaces on the device:

daniel@bridge:~$ ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:79:43 brd ff:ff:ff:ff:ff:ff

altname enp3s0

3: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

altname enp11s0

4: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:ae:52 brd ff:ff:ff:ff:ff:ff

altname enp19s0

13: ens192.542@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

14: ens224.543@ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:ae:52 brd ff:ff:ff:ff:ff:ff

15: ens192.520@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

16: ens192.522@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

17: ens192.524@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

18: ens192.526@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

19: ens192.528@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

20: ens192.530@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

21: ens192.532@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

22: ens192.534@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

23: ens192.536@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

24: ens192.538@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

25: ens192.540@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

26: ens192.541@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff

27: ens192.544@ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:ad:3a:52 brd ff:ff:ff:ff:ff:ff
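With this many subinterfaces, typing the three ip commands by hand for each one gets tedious. The sketch below prints the commands for a small table of interfaces instead of running them, so the output can be reviewed first. The function name vlan_commands is my own, and only the first table row comes from this post; the address on the second row is a placeholder.

```shell
# Print (not run) the ip commands for one VLAN subinterface.
# Arguments: parent interface, VLAN ID, address in CIDR notation.
vlan_commands() {
    local parent="$1" vlan="$2" addr="$3"
    local name="${parent}.${vlan}"
    echo "ip link add link ${parent} name ${name} type vlan id ${vlan}"
    echo "ip link set dev ${name} up"
    echo "ip addr add ${addr} dev ${name}"
}

# Table of "parent vlan address" rows.
# Row 1 is the example from this post; row 2 uses a placeholder address.
while read -r parent vlan addr; do
    vlan_commands "$parent" "$vlan" "$addr"
done <<'EOF'
ens192 542 192.0.2.57/30
ens192 544 192.0.2.61/30
EOF
```

Once the printed commands look right, the output can be piped to sudo sh to apply them.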

The Linux device should act as a router, meaning that it routes packets between its interfaces. This is not done by default, as ip_forward is set to 0. First, verify what ip_forward is set to:

daniel@bridge:~$ sysctl net.ipv4.ip_forward

net.ipv4.ip_forward = 0

Then set it to 1 to make it route packets:

daniel@bridge:~$ sudo sysctl -w net.ipv4.ip_forward=1

net.ipv4.ip_forward = 1

The device will now route packets between interfaces.
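Keep in mind that sysctl -w only changes the running kernel, so the setting is lost at reboot. One common way to make it persistent is a drop-in file under /etc/sysctl.d/. The sketch below writes such a file; the function name write_forwarding_conf is my own, and it is exercised against a temporary file here so nothing on the system is touched.

```shell
# Write a sysctl drop-in that enables IPv4 forwarding at boot.
# The target path is an argument so the function can be tried on a scratch file first.
write_forwarding_conf() {
    local target="$1"
    printf '%s\n' '# Route packets between interfaces' 'net.ipv4.ip_forward = 1' > "$target"
}

# Try it against a temporary file and show the result.
scratch="$(mktemp)"
write_forwarding_conf "$scratch"
cat "$scratch"
rm -f "$scratch"
```

For the real thing, the function would be run as root with a path such as /etc/sysctl.d/99-ip-forward.conf (the file name is an assumption; any .conf name in that directory should work), followed by sudo sysctl --system to reload the configuration.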

It is now time to install tcconfig, a Python project that uses tc (traffic control) on Linux to modify how an interface behaves with regard to latency, jitter, packet loss, and throughput:

daniel@bridge:~$ sudo pip3 install tcconfig

Collecting tcconfig

Downloading tcconfig-0.28.0-py3-none-any.whl (52 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 52.1/52.1 KB 2.1 MB/s eta 0:00:00

Collecting docker<7,>=1.9.0

Downloading docker-6.1.3-py3-none-any.whl (148 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 148.1/148.1 KB 10.5 MB/s eta 0:00:00

Collecting path<17,>=13

Downloading path-16.6.0-py3-none-any.whl (26 kB)

Collecting voluptuous<1

Downloading voluptuous-0.13.1-py3-none-any.whl (29 kB)

Collecting loguru<1,>=0.4.1

Downloading loguru-0.7.0-py3-none-any.whl (59 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 60.0/60.0 KB 14.4 MB/s eta 0:00:00

Requirement already satisfied: pyparsing<4,>=2.0.3 in /usr/lib/python3/dist-packages (from tcconfig) (2.4.7)

Collecting pyroute2<1,>=0.6.1

Downloading pyroute2-0.7.9-py3-none-any.whl (456 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 456.5/456.5 KB 16.9 MB/s eta 0:00:00

Collecting subprocrunner<3,>=1.2.1

Downloading subprocrunner-2.0.0-py3-none-any.whl (10 kB)

Collecting humanreadable<1,>=0.1.0

Downloading humanreadable-0.3.0-py3-none-any.whl (30 kB)

Collecting typepy<2,>=1.1.1

Downloading typepy-1.3.1-py3-none-any.whl (31 kB)

Collecting msgfy<1,>=0.1.0

Downloading msgfy-0.2.0-py3-none-any.whl (4.3 kB)

Collecting SimpleSQLite<2,>=1.1.1

Downloading SimpleSQLite-1.3.2-py3-none-any.whl (32 kB)

Collecting DataProperty<2,>=0.51.0

Downloading DataProperty-1.0.0-py3-none-any.whl (27 kB)

Collecting mbstrdecoder<2,>=1.0.0

Downloading mbstrdecoder-1.1.3-py3-none-any.whl (7.8 kB)

Collecting websocket-client>=0.32.0

Downloading websocket_client-1.6.1-py3-none-any.whl (56 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 56.9/56.9 KB 16.3 MB/s eta 0:00:00

Requirement already satisfied: urllib3>=1.26.0 in /usr/lib/python3/dist-packages (from docker<7,>=1.9.0->tcconfig) (1.26.5)

Collecting packaging>=14.0

Downloading packaging-23.1-py3-none-any.whl (48 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 48.9/48.9 KB 12.8 MB/s eta 0:00:00

Collecting requests>=2.26.0

Downloading requests-2.31.0-py3-none-any.whl (62 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 62.6/62.6 KB 17.1 MB/s eta 0:00:00

Collecting tabledata<2,>=1.1.3

Downloading tabledata-1.3.1-py3-none-any.whl (11 kB)

Collecting sqliteschema<2,>=1.2.1

Downloading sqliteschema-1.3.0-py3-none-any.whl (12 kB)

Collecting pathvalidate<4,>=2.5.2

Downloading pathvalidate-3.0.0-py3-none-any.whl (21 kB)

Requirement already satisfied: chardet<6,>=3.0.4 in /usr/lib/python3/dist-packages (from mbstrdecoder<2,>=1.0.0->DataProperty<2,>=0.51.0->tcconfig) (4.0.0)

Requirement already satisfied: idna<4,>=2.5 in /usr/lib/python3/dist-packages (from requests>=2.26.0->docker<7,>=1.9.0->tcconfig) (3.3)

Collecting charset-normalizer<4,>=2

Downloading charset_normalizer-3.1.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (199 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 199.3/199.3 KB 16.1 MB/s eta 0:00:00

Requirement already satisfied: certifi>=2017.4.17 in /usr/lib/python3/dist-packages (from requests>=2.26.0->docker<7,>=1.9.0->tcconfig) (2020.6.20)

Requirement already satisfied: pytz>=2018.9 in /usr/lib/python3/dist-packages (from typepy<2,>=1.1.1->tcconfig) (2022.1)

Requirement already satisfied: python-dateutil<3.0.0,>=2.8.0 in /usr/lib/python3/dist-packages (from typepy<2,>=1.1.1->tcconfig) (2.8.1)

Installing collected packages: voluptuous, pyroute2, websocket-client, pathvalidate, path, packaging, msgfy, mbstrdecoder, loguru, charset-normalizer, typepy, subprocrunner, requests, humanreadable, docker, DataProperty, tabledata, sqliteschema, SimpleSQLite, tcconfig

Attempting uninstall: requests

Found existing installation: requests 2.25.1

Not uninstalling requests at /usr/lib/python3/dist-packages, outside environment /usr

Can't uninstall 'requests'. No files were found to uninstall.

Successfully installed DataProperty-1.0.0 SimpleSQLite-1.3.2 charset-normalizer-3.1.0 docker-6.1.3 humanreadable-0.3.0 loguru-0.7.0 mbstrdecoder-1.1.3 msgfy-0.2.0 packaging-23.1 path-16.6.0 pathvalidate-3.0.0 pyroute2-0.7.9 requests-2.31.0 sqliteschema-1.3.0 subprocrunner-2.0.0 tabledata-1.3.1 tcconfig-0.28.0 typepy-1.3.1 voluptuous-0.13.1 websocket-client-1.6.1

To verify it has been installed, use the tcshow command:

daniel@bridge:~$ tcshow ens192.542

{

"ens192.542": {

"outgoing": {},

"incoming": {}

}

}

Nothing is currently applied to the interface. Let’s try a ping towards one of the devices to see what the latency is:

daniel@bridge:~$ ping 192.0.2.58 -c 5

PING 192.0.2.58 (192.0.2.58) 56(84) bytes of data.

64 bytes from 192.0.2.58: icmp_seq=1 ttl=255 time=0.367 ms

64 bytes from 192.0.2.58: icmp_seq=2 ttl=255 time=0.295 ms

64 bytes from 192.0.2.58: icmp_seq=3 ttl=255 time=0.219 ms

64 bytes from 192.0.2.58: icmp_seq=4 ttl=255 time=0.241 ms

64 bytes from 192.0.2.58: icmp_seq=5 ttl=255 time=0.301 ms

--- 192.0.2.58 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4095ms

rtt min/avg/max/mdev = 0.219/0.284/0.367/0.051 ms

The latency is low, as expected, since these hosts are in the same virtual network. Now let’s add some latency to the interface to make it look like the two devices are on a WAN on different continents. Let’s add 150ms of latency using the tcset command:

daniel@bridge:~$ sudo tcset --device ens192.542 --delay 150

Let’s try the ping again:

daniel@bridge:~$ ping 192.0.2.58 -c 5

PING 192.0.2.58 (192.0.2.58) 56(84) bytes of data.

64 bytes from 192.0.2.58: icmp_seq=1 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=2 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=3 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=4 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=5 ttl=255 time=150 ms

--- 192.0.2.58 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4005ms

rtt min/avg/max/mdev = 150.203/150.280/150.316/0.042 ms

The latency is now 150ms and this can also be verified with the tcshow command:

daniel@bridge:~$ tcshow ens192.542

{

"ens192.542": {

"outgoing": {

"protocol=ip": {

"filter_id": "800::800",

"delay": "150ms",

"rate": "10Gbps"

}

},

"incoming": {}

}

}
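Since tcconfig drives tc under the hood, it can help to see roughly what a hand-written rule looks like. The sketch below prints plain tc commands that use the netem qdisc, which also accepts a second value for jitter. This is not the exact qdisc tree that tcconfig builds (the rate and filter_id fields in the tcshow output suggest it adds a shaper and filters as well), and the 20ms jitter value and the function name netem_command are purely illustrative.

```shell
# Print a tc command that attaches netem to the root of a device.
# Arguments: device, delay, optional jitter (e.g. 20ms).
netem_command() {
    local dev="$1" delay="$2" jitter="${3:-}"
    # "${jitter:+ ${jitter}}" expands to " <jitter>" only when jitter is non-empty.
    echo "tc qdisc add dev ${dev} root netem delay ${delay}${jitter:+ ${jitter}}"
}

netem_command ens192.542 150ms        # fixed 150 ms delay
netem_command ens192.542 150ms 20ms   # 150 ms +/- 20 ms of jitter
```

The printed commands need root and an interface that has no other root qdisc applied, so any tcconfig rule has to be removed first. A hand-added rule can be removed with sudo tc qdisc del dev ens192.542 root.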

To remove the added latency, use the tcdel command:

daniel@bridge:~$ sudo tcdel ens192.542 --all

[INFO] delete ens192.542 qdisc

[INFO] no qdisc to delete for the incoming device.

[INFO] delete ens192.542 ingress qdisc

The latency is now back to normal:

daniel@bridge:~$ ping 192.0.2.58 -c 5

PING 192.0.2.58 (192.0.2.58) 56(84) bytes of data.

64 bytes from 192.0.2.58: icmp_seq=1 ttl=255 time=0.228 ms

64 bytes from 192.0.2.58: icmp_seq=2 ttl=255 time=0.326 ms

64 bytes from 192.0.2.58: icmp_seq=3 ttl=255 time=0.283 ms

64 bytes from 192.0.2.58: icmp_seq=4 ttl=255 time=0.248 ms

64 bytes from 192.0.2.58: icmp_seq=5 ttl=255 time=0.281 ms

--- 192.0.2.58 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4090ms

rtt min/avg/max/mdev = 0.228/0.273/0.326/0.033 ms

What if we want to simulate packet loss? That is possible as well. Let’s try simulating a 50% packet loss:

daniel@bridge:~$ sudo tcset --device ens192.542 --loss 50%

Let’s try the ping again:

daniel@bridge:~$ ping 192.0.2.58 -c 10

PING 192.0.2.58 (192.0.2.58) 56(84) bytes of data.

64 bytes from 192.0.2.58: icmp_seq=1 ttl=255 time=0.227 ms

64 bytes from 192.0.2.58: icmp_seq=3 ttl=255 time=0.314 ms

64 bytes from 192.0.2.58: icmp_seq=4 ttl=255 time=0.272 ms

64 bytes from 192.0.2.58: icmp_seq=6 ttl=255 time=0.314 ms

64 bytes from 192.0.2.58: icmp_seq=7 ttl=255 time=0.308 ms

64 bytes from 192.0.2.58: icmp_seq=8 ttl=255 time=0.267 ms

64 bytes from 192.0.2.58: icmp_seq=10 ttl=255 time=0.190 ms

--- 192.0.2.58 ping statistics ---

10 packets transmitted, 7 received, 30% packet loss, time 9214ms

rtt min/avg/max/mdev = 0.190/0.270/0.314/0.044 ms

There was now packet loss, although in this small sample only 3 of the 10 packets (30%) were dropped rather than the configured 50%. With latency we could see exactly the added delay, while with packet loss the drops are random, so a short ping does not show exactly how much loss is configured. This should be clearer when using a protocol such as BFD to measure loss.
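A larger sample gets closer to the configured value. The sketch below pulls the loss percentage out of the summary line that ping prints, so that runs of different lengths are easy to compare. The function name loss_percent is my own, and it assumes the iputils summary format shown in this post.

```shell
# Extract the loss percentage from ping's summary line (read from stdin).
loss_percent() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Summary line taken from the 10-packet test in this post.
echo "10 packets transmitted, 7 received, 30% packet loss, time 9214ms" | loss_percent
```

Against the lab, something like ping -c 500 -i 0.2 -q 192.0.2.58 | loss_percent would give a far more representative number than ten packets do.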

It’s also possible to get even more advanced by only adding delay if traffic comes from certain networks. To demonstrate this, I will ping using different source IPs. Let’s add delay if the packet is coming from 192.0.2.40/29 (the direction needs to be incoming):

daniel@bridge:~$ sudo tcset --device ens192.542 --delay 150 --network 192.0.2.40/29 --direction incoming

First let’s try our standard ping:

daniel@bridge:~$ ping 192.0.2.58 -c 5

PING 192.0.2.58 (192.0.2.58) 56(84) bytes of data.

64 bytes from 192.0.2.58: icmp_seq=1 ttl=255 time=0.292 ms

64 bytes from 192.0.2.58: icmp_seq=2 ttl=255 time=0.318 ms

64 bytes from 192.0.2.58: icmp_seq=3 ttl=255 time=0.262 ms

64 bytes from 192.0.2.58: icmp_seq=4 ttl=255 time=0.329 ms

64 bytes from 192.0.2.58: icmp_seq=5 ttl=255 time=0.366 ms

--- 192.0.2.58 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4073ms

rtt min/avg/max/mdev = 0.262/0.313/0.366/0.035 ms

Then let’s try a ping where the packet is sourced from 192.0.2.41:

daniel@bridge:~$ ping 192.0.2.58 -c 5 -I 192.0.2.41

PING 192.0.2.58 (192.0.2.58) from 192.0.2.41 : 56(84) bytes of data.

64 bytes from 192.0.2.58: icmp_seq=1 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=2 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=3 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=4 ttl=255 time=150 ms

64 bytes from 192.0.2.58: icmp_seq=5 ttl=255 time=150 ms

--- 192.0.2.58 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4006ms

rtt min/avg/max/mdev = 150.315/150.340/150.363/0.017 ms

This can be used to simulate poor performance between two specific sites. This sometimes happens in real WANs.
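Because the goal is to impair each connection individually, it can be handy to keep the desired conditions in a small table and generate the tcset commands from it. The sketch below only prints the commands. The function name profile_commands and the delay and loss values are illustrative, the flags mirror the ones used earlier in this post, and combining --delay and --loss in a single call is an assumption worth checking against your tcconfig version.

```shell
# Print tcset commands for a table of "device delay loss" rows read from stdin.
profile_commands() {
    while read -r dev delay loss; do
        [ -z "$dev" ] && continue   # skip blank lines
        echo "tcset --device ${dev} --delay ${delay} --loss ${loss}"
    done
}

# Illustrative values only; the interface names come from the ip link listing in this post.
profile_commands <<'EOF'
ens192.542 150 1%
ens192.544 20 0.1%
EOF
```

Each printed line can be run with sudo, and tcdel with --all, as shown earlier, resets an interface before a new profile is applied.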

Building a WAN impairment device is simple using Linux and tcconfig. I hope this post has demonstrated how to easily insert a WAN impairment device into a VMware vSphere environment. All the concepts can apply to other environments as well, though. Happy building!
