As a follow-up to yesterday’s post on native VLANs, there was a question about what would happen to 802.1Q-tagged frames traversing an unmanaged switch, unmanaged in this case meaning a switch that does not support VLANs. While this might be more of a theoretical question today, it’s still interesting to dive into it to better understand how an 802.1Q-tagged frame differs from an untagged frame.

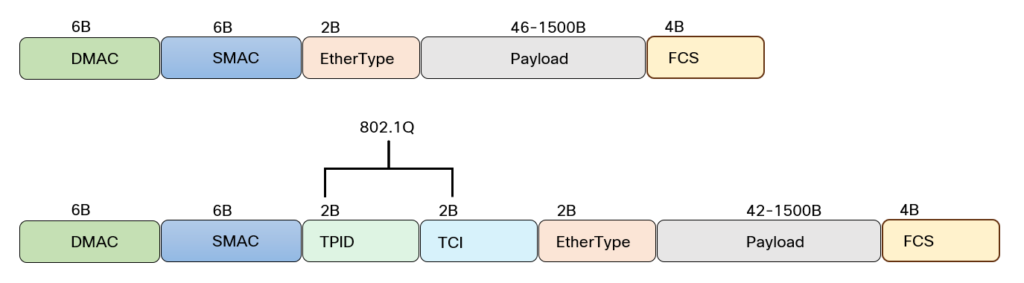

Before we can answer the question of what a VLAN-unaware switch should do, let’s refresh our memory on the Ethernet header. An Ethernet frame consists of Destination MAC, Source MAC, EtherType, payload, and FCS. 802.1Q adds four bytes, inserted between the Source MAC and the EtherType, consisting of the Tag Protocol Identifier (TPID) and Tag Control Information (TCI). This is shown below:

Note how the TPID in the tagged frame sits where the EtherType sits in an untagged frame. It’s also a 2-byte field, and it is set to 0x8100 for tagged frames. The EtherType field is still there, four bytes later, and would be, for example, 0x0800 for an IPv4 payload.
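To make the byte layout concrete, here is a minimal Python sketch that builds the same frame with and without a tag. The helper names (`build_frame`, `type_field_seen_by_l2`) are made up for illustration, the FCS is left out, and the 100-byte zero payload is just a stand-in for an IP packet.

```python
import struct
from typing import Optional

TPID_8021Q = 0x8100  # value that marks a frame as 802.1Q-tagged


def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes,
                vlan_id: Optional[int] = None, pcp: int = 0, dei: int = 0) -> bytes:
    """Return an Ethernet frame without FCS; insert an 802.1Q tag if vlan_id is given."""
    header = dst + src
    if vlan_id is not None:
        # TCI = 3 bits priority, 1 bit DEI, 12 bits VLAN ID
        tci = (pcp << 13) | (dei << 12) | (vlan_id & 0x0FFF)
        header += struct.pack("!HH", TPID_8021Q, tci)
    return header + struct.pack("!H", ethertype) + payload


def type_field_seen_by_l2(frame: bytes) -> int:
    """The two bytes right after the MAC addresses, whatever they mean."""
    return struct.unpack("!H", frame[12:14])[0]


dst = bytes.fromhex("5254000a78a2")
src = bytes.fromhex("5254000e3a34")
payload = bytes(100)  # placeholder payload, all zeros

untagged = build_frame(dst, src, 0x0800, payload)
tagged = build_frame(dst, src, 0x0800, payload, vlan_id=200)

print(len(untagged), hex(type_field_seen_by_l2(untagged)))  # 114 0x800
print(len(tagged), hex(type_field_seen_by_l2(tagged)))      # 118 0x8100
```

The tagged frame is exactly four bytes longer, and a device that only looks at offset 12 sees 0x8100 where it would otherwise see the EtherType.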

To demonstrate what this looks like on the wire, I’ve set up two routers with the following configuration:

    hostname R1
    !
    vrf definition ETHERNET
     !
     address-family ipv4
     exit-address-family
    !
    interface GigabitEthernet1.100
     encapsulation dot1Q 100 native
     ip address 10.0.0.1 255.255.255.252
    !
    interface GigabitEthernet1.200
     encapsulation dot1Q 200
     vrf forwarding ETHERNET
     ip address 10.0.0.1 255.255.255.252

The other side is identical, except that it uses the IP address 10.0.0.2. I added a VRF to be able to use the same IP addresses on both interfaces. Note that one subinterface uses untagged frames (VLAN 100 is configured as native) and the other uses tagged frames. I then initiated a ping from 10.0.0.2 to 10.0.0.1. For VLAN 100, the untagged frame is shown below:

Frame 11: 114 bytes on wire (912 bits), 114 bytes captured (912 bits)

Ethernet II, Src: 52:54:00:0e:3a:34, Dst: 52:54:00:0a:78:a2

Destination: 52:54:00:0a:78:a2

Source: 52:54:00:0e:3a:34

Type: IPv4 (0x0800)

Internet Protocol Version 4, Src: 10.0.0.2, Dst: 10.0.0.1

Internet Control Message Protocol

Now let’s compare this to the tagged frame:

Frame 21: 118 bytes on wire (944 bits), 118 bytes captured (944 bits)

Ethernet II, Src: 52:54:00:0e:3a:34, Dst: 52:54:00:0a:78:a2

Destination: 52:54:00:0a:78:a2

Source: 52:54:00:0e:3a:34

Type: 802.1Q Virtual LAN (0x8100)

802.1Q Virtual LAN, PRI: 0, DEI: 0, ID: 200

000. .... .... .... = Priority: Best Effort (default) (0)

...0 .... .... .... = DEI: Ineligible

.... 0000 1100 1000 = ID: 200

Type: IPv4 (0x0800)

Internet Protocol Version 4, Src: 10.0.0.2, Dst: 10.0.0.1

Internet Control Message Protocol

It’s a little confusing how Wireshark displays this, since both fields are labeled Type, but the TPID is set to 0x8100 and the EtherType is set to 0x0800. If we look at an ARP frame we’ll see that the EtherType is set to 0x0806:

Frame 2: 64 bytes on wire (512 bits), 64 bytes captured (512 bits)

Ethernet II, Src: 52:54:00:0a:78:a2, Dst: ff:ff:ff:ff:ff:ff

Destination: ff:ff:ff:ff:ff:ff

Source: 52:54:00:0a:78:a2

Type: 802.1Q Virtual LAN (0x8100)

802.1Q Virtual LAN, PRI: 0, DEI: 0, ID: 200

000. .... .... .... = Priority: Best Effort (default) (0)

...0 .... .... .... = DEI: Ineligible

.... 0000 1100 1000 = ID: 200

Type: ARP (0x0806)

Padding: 0000000000000000000000000000

Trailer: 00000000

Address Resolution Protocol (reply/gratuitous ARP)
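The bit breakdown Wireshark shows for the tag can be reproduced with a few shifts and masks. The following Python sketch decodes the header bytes of the ARP frame above, rebuilt by hand from the values in the capture; `decode_8021q` is a hypothetical helper, not part of any library.

```python
import struct


def decode_8021q(frame: bytes):
    """Return the tag fields of a frame (at least 18 bytes), or None if it is untagged."""
    tpid, tci, ethertype = struct.unpack("!HHH", frame[12:18])
    if tpid != 0x8100:
        return None
    return {
        "pcp": tci >> 13,          # top 3 bits: priority
        "dei": (tci >> 12) & 0x1,  # next bit: drop eligible indicator
        "vid": tci & 0x0FFF,       # low 12 bits: VLAN ID
        "ethertype": ethertype,    # the real EtherType, after the tag
    }


# Dst MAC, Src MAC, TPID, TCI, EtherType as shown in the ARP capture
arp_header = bytes.fromhex("ffffffffffff" "5254000a78a2" "8100" "00c8" "0806")
tag = decode_8021q(arp_header)
print(tag)  # {'pcp': 0, 'dei': 0, 'vid': 200, 'ethertype': 2054}

# Accounting for the 64 bytes on the wire:
# MACs + tag + EtherType + ARP + padding + trailer
on_wire = 12 + 4 + 2 + 28 + 14 + 4
print(on_wire)  # 64
```

A TCI of 0x00C8 therefore means priority 0, DEI 0, and VLAN 200, and 2054 is 0x0806 in decimal.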

Now we understand that from the perspective of a switch operating at L2, only two things differ between a tagged and an untagged frame: the two bytes after the source MAC hold 0x8100 instead of, for example, 0x0800, and the frame is four bytes larger.

What, then, should an unmanaged switch (a device that doesn’t do 802.1Q) do with an 802.1Q-tagged frame? It should forward it! Without modifying the frame! Even for a device that doesn’t understand 802.1Q, this is a perfectly valid frame. The frame just has a value in the EtherType field (really the TPID, but the switch wouldn’t know this) that it doesn’t recognize. That does not warrant dropping the frame, or even worse, modifying it.

Let’s take another example. What if you implemented IPv6 and had an EtherType of 0x86DD? Should a switch drop frames with this EtherType just because it doesn’t support IPv6? That would be absurd! A switch should not concern itself with the payload of the frame. It should just bridge the frame based on the MAC address table.
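As a rough illustration of "just bridge the frame", here is a toy learning bridge in Python. It is a sketch of the ideal behavior, not of any real product: the `UnawareSwitch` class, the port numbers, and the zero-filled payloads are all assumptions for the example. The point is that the forwarding decision never reads anything past the two MAC addresses.

```python
class UnawareSwitch:
    """Hypothetical VLAN-unaware learning bridge: one MAC table, no EtherType checks."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}  # source MAC -> port it was last seen on

    def receive(self, in_port, frame):
        dst, src = frame[0:6], frame[6:12]
        self.mac_table[src] = in_port  # learn where the sender lives
        out = self.mac_table.get(dst)
        if out is None or dst[0] & 1:
            # Unknown unicast, broadcast, or multicast: flood to all other ports
            targets = [p for p in self.ports if p != in_port]
        elif out == in_port:
            targets = []  # destination is on the ingress port: filter
        else:
            targets = [out]
        return {p: frame for p in targets}  # frame bytes are never modified


R1 = bytes.fromhex("5254000a78a2")
R2 = bytes.fromhex("5254000e3a34")
BROADCAST = bytes.fromhex("ffffffffffff")
TAG_VLAN_200 = bytes.fromhex("810000c8")

sw = UnawareSwitch(ports=[1, 2, 3])

arp = BROADCAST + R1 + TAG_VLAN_200 + bytes.fromhex("0806") + bytes(28)
ping = R1 + R2 + TAG_VLAN_200 + bytes.fromhex("0800") + bytes(100)
ipv6 = R2 + R1 + bytes.fromhex("86dd") + bytes(60)

out_arp = sw.receive(1, arp)     # tagged broadcast: flooded to ports 2 and 3
out_ping = sw.receive(2, ping)   # tagged unicast: sent only to port 1
out_ipv6 = sw.receive(1, ipv6)   # untagged IPv6: sent only to port 2

print(sorted(out_arp), sorted(out_ping), sorted(out_ipv6))  # [2, 3] [1] [2]
```

Tagged IPv4, tagged ARP, and untagged IPv6 all take the same code path, and each frame leaves with its bytes intact.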

Now we understand that the reasonable thing for a VLAN-unaware switch to do is to forward the frame. The only acceptable exception is dropping a full-sized frame, which with the tag is 1522 bytes instead of 1518 bytes, because it exceeds the maximum frame size the interface accepts.

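Note that things could still break if you have a VLAN-unaware switch. For example, what if you have the same MAC address in two different VLANs? The VLAN-unaware switch can’t handle this properly: it keeps a single MAC address table that ignores the VLAN ID, so it can’t tell the two hosts apart, and the entry keeps moving whenever the two sit behind different ports.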
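The duplicate-MAC problem can be sketched in a few lines of Python. This assumes a hypothetical scenario where the same MAC address shows up in VLAN 100 behind port 1 and in VLAN 200 behind port 2; the two `learn_*` functions are simplified stand-ins for how an unaware and an aware switch might key their tables.

```python
def learn_unaware(table, mac, vid, port):
    """VLAN-unaware learning: table keyed by MAC only, the VLAN ID is ignored."""
    moved = mac in table and table[mac] != port
    table[mac] = port
    return moved


def learn_aware(table, mac, vid, port):
    """VLAN-aware learning: table keyed by (VLAN ID, MAC)."""
    key = (vid, mac)
    moved = key in table and table[key] != port
    table[key] = port
    return moved


mac = bytes.fromhex("5254000a78a2")
# (VLAN ID, ingress port) of four frames that all carry the same source MAC
arrivals = [(100, 1), (200, 2), (100, 1), (200, 2)]

unaware_table, aware_table = {}, {}
unaware_moves = sum(learn_unaware(unaware_table, mac, vid, port) for vid, port in arrivals)
aware_moves = sum(learn_aware(aware_table, mac, vid, port) for vid, port in arrivals)

print(unaware_moves, aware_moves)  # 3 0
```

The unaware table flaps on every frame after the first, so traffic for one host can end up on the other host's port, while the VLAN-aware table holds two stable entries.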

While we understand that the reasonable thing to do is to forward the frame, this is not always how things go in real life. Some VLAN-unaware switches will forward the frame intact, some will drop the frame, and some may even remove the 802.1Q header! All bets are off!

In this post we learned how 802.1Q was able to introduce VLAN tagging “transparently” by giving the TPID field the same format and position as the EtherType. Switches should bridge frames based on destination MAC address and not be concerned with what the payload is. VLAN-unaware switches should in theory be transparent to 802.1Q-tagged frames, but not all devices operate like this.

The cheap home switches that I’ve tried over the years all forward VLAN tagged frames without trouble.

Also note that IEEE 802.3as-2006 extends the Ethernet maximum frame size to 2000 bytes (net 1982 after accounting for the header and CRC). (Although this is not widely known by the web because IEEE standards documents aren’t freely available.) So probably the majority of Ethernet hardware in operation today has no issues with 1522-byte frames with a VLAN header.

Interesting! I haven’t checked when jumbo frames were first mentioned. Many networks implement 9000 bytes although it seems the returns are diminishing.

I’m pretty sure jumboframes entered the fray along with Gigabit Ethernet. That was around 2000 or 2001 or so and CPUs definitely had a hard time keeping up with more than 80 thousand packets per second. (Or the PCI bus keeping up with the amount of data coming and/or going out, for that matter. Yes, I was pushing the envelope back then.)

More recently, this is no longer a problem with super fast busses and CPUs, and also, NICs got more advanced and offered all kinds of offloading features, including accepting a 64k or so IP packet and then splitting it up into 1500-byte packets: https://en.wikipedia.org/wiki/TCP_offload_engine

But… it’s now not uncommon to use devices with performance limitations, such as NASes and Raspberry Pis. I recently found a new Realtek driver for my Synology that lets me use a 2.5 Gbps USB Ethernet dongle, and it turned out that it struggled to provide more performance than the regular 10/100/1000 Mbps NIC… until I enabled 9000-byte jumboframes. Now that’s a NAS from 2018 so perhaps this doesn’t mean much, but still.

So I decided to check on my Raspberry Pi 400. (Pi 4 variant.) That one didn’t need new drivers but ran iperf3 at 2.32 Gbps consistently, no jumboframes required.

Still, in a datacenter I’d definitely use jumboframes to save some CPU cycles = electricity and also TCP ramps up to high speeds quicker because that happens in packet increments.

Note that according to:

Jain, R., “Error Characteristics of Fiber Distributed Data Interface (FDDI)”, IEEE Transactions on Communications, August 1990.

The FDDI/Ethernet CRC has a Hamming distance of 4 for packets between 375 and 11453 bytes (inclusive). The Hamming distance reduces as packets get larger than that. This means that the CRC protects against up to 3 flipped bits in a packet 100% of the time when you stick to ~11k or smaller packets. As packets get larger, there are more bits to flip so you don’t want to go overboard in the absence of a 64-bit CRC.

Then again, my 10 Gbps Mac Mini will do 16000-byte jumboframes and I’ve seen equipment that will do 64000-byte packets.


I have encountered this issue when a user connected their home switch (TP-Link) to our Aruba network.

A real mess.

Keep up the blogging, love your blog.

Thanks!