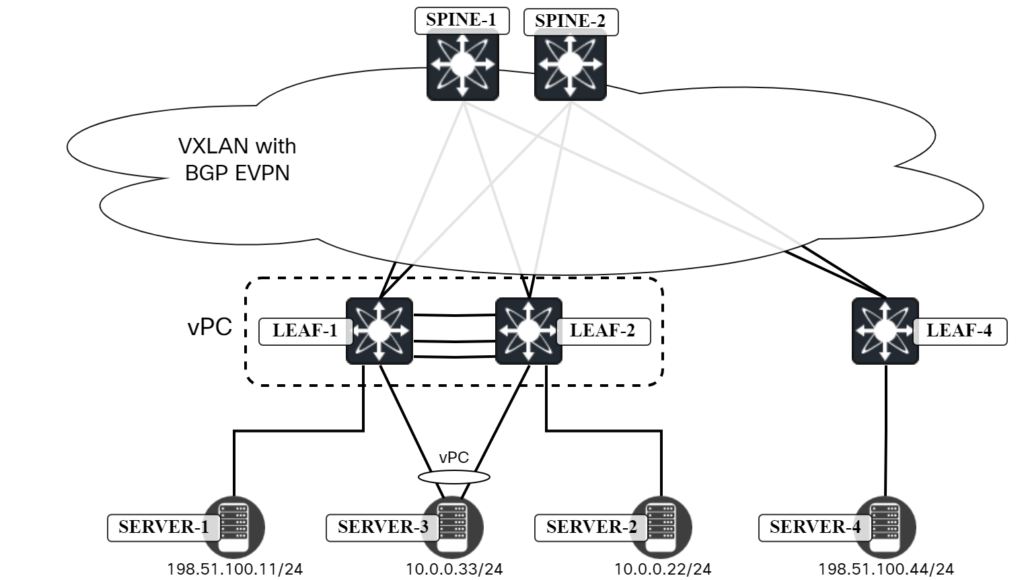

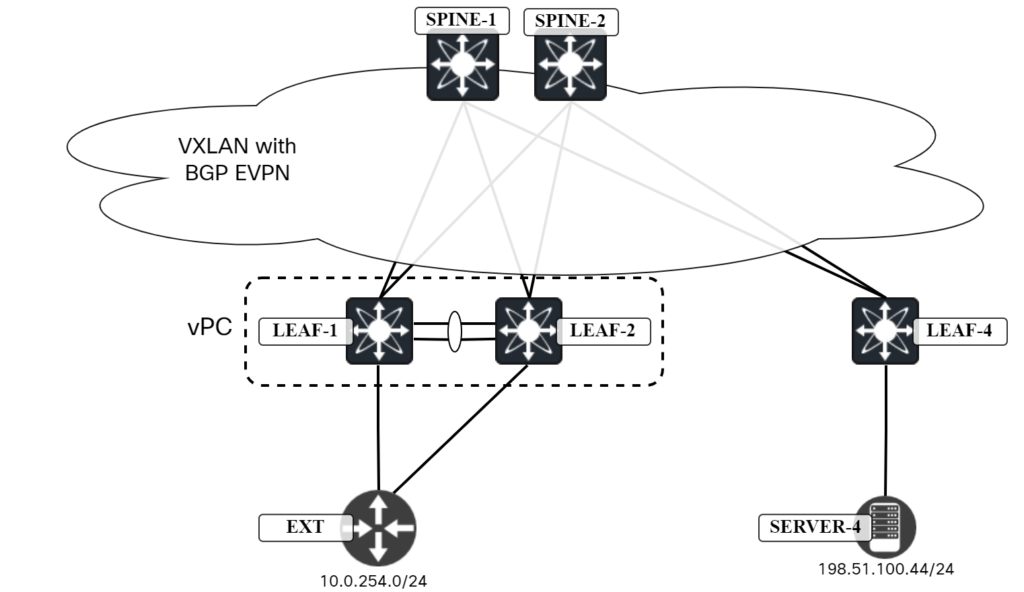

As I hinted in an earlier post, there are some failure scenarios you need to consider for vPC. We can’t really do much about the first scenario, but I’ll describe it anyway. The topology is the one below:

Server4 needs to send a packet to Server1. Leaf4 has the following routes for 198.51.100.11:

Leaf4# show bgp l2vpn evpn 198.51.100.11

BGP routing table information for VRF default, address family L2VPN EVPN

Route Distinguisher: 192.0.2.3:32777

BGP routing table entry for [2]:[0]:[0]:[48]:[0050.56ad.8506]:[32]:[198.51.100.11]/272, version 13677

Paths: (2 available, best #2)

Flags: (0x000202) (high32 00000000) on xmit-list, is not in l2rib/evpn, is not in HW

Path type: internal, path is valid, not best reason: Neighbor Address, no labeled nexthop

AS-Path: NONE, path sourced internal to AS

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Origin IGP, MED not set, localpref 100, weight 0

Received label 10000 10001

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.2

Advertised path-id 1

Path type: internal, path is valid, is best path, no labeled nexthop

Imported to 3 destination(s)

Imported paths list: Tenant1 L3-10001 L2-10000

AS-Path: NONE, path sourced internal to AS

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Origin IGP, MED not set, localpref 100, weight 0

Received label 10000 10001

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.1

Path-id 1 not advertised to any peer

Route Distinguisher: 192.0.2.4:32777

BGP routing table entry for [2]:[0]:[0]:[48]:[0050.56ad.8506]:[32]:[198.51.100.11]/272, version 13680

Paths: (2 available, best #1)

Flags: (0x000202) (high32 00000000) on xmit-list, is not in l2rib/evpn, is not in HW

Advertised path-id 1

Path type: internal, path is valid, is best path, no labeled nexthop

Imported to 3 destination(s)

Imported paths list: Tenant1 L3-10001 L2-10000

AS-Path: NONE, path sourced internal to AS

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Origin IGP, MED not set, localpref 100, weight 0

Received label 10000 10001

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.f3bb.1b08

Originator: 192.0.2.4 Cluster list: 192.0.2.1

Path type: internal, path is valid, not best reason: Neighbor Address, no labeled nexthop

AS-Path: NONE, path sourced internal to AS

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Origin IGP, MED not set, localpref 100, weight 0

Received label 10000 10001

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.f3bb.1b08

Originator: 192.0.2.4 Cluster list: 192.0.2.2

Path-id 1 not advertised to any peer

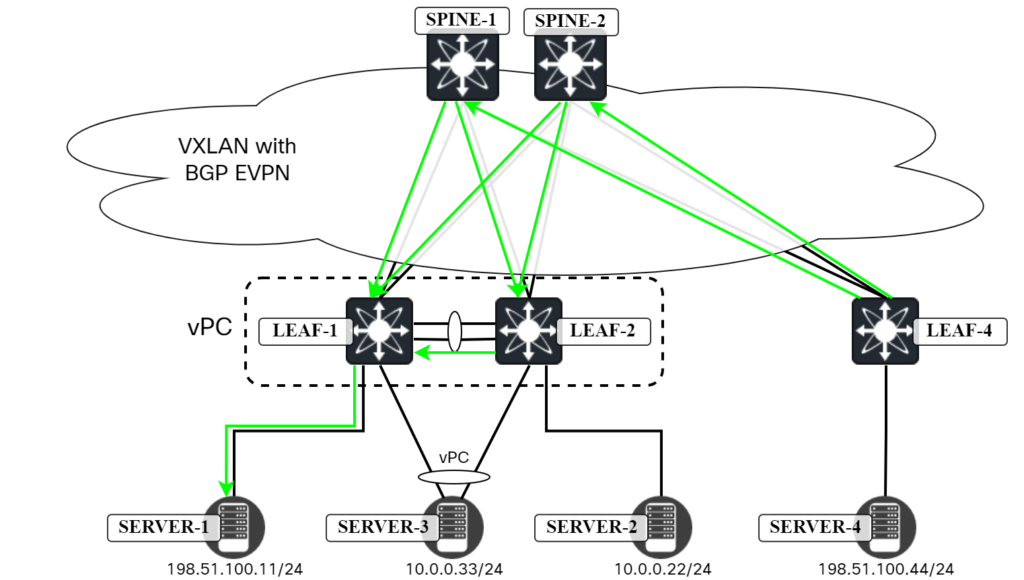

Both Leaf1 and Leaf2 are advertising the RT2, even though Server1 is physically connected only to Leaf1. As the next hop is the anycast IP 203.0.113.12, the packet can arrive at either Leaf1 or Leaf2. This is shown below:

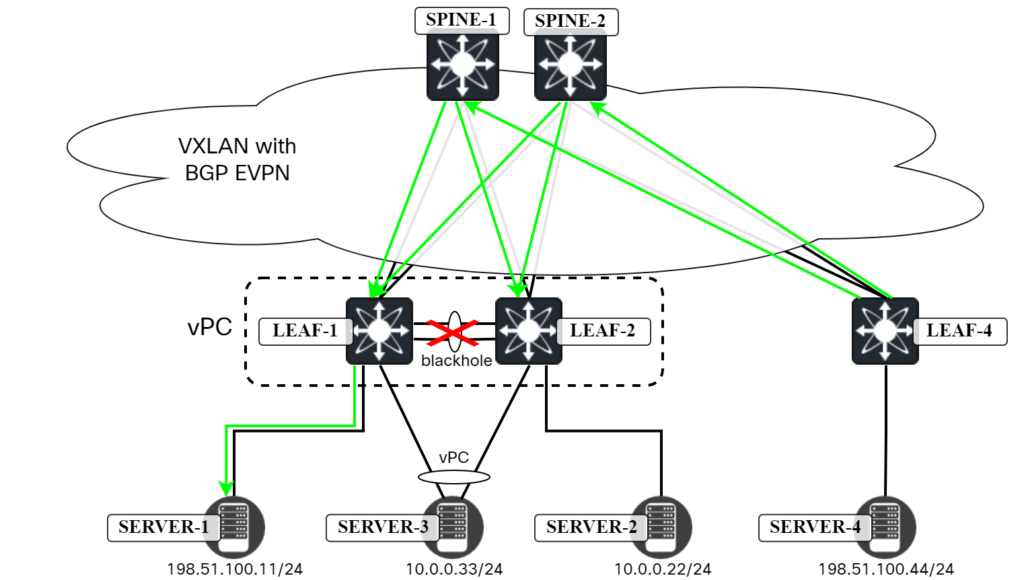

Now, what would happen if the peer link between Leaf1 and Leaf2 goes down? Whether the orphan ports (Server1 and Server2) remain up depends on your vPC configuration, but for now let’s assume that they do. Some packets would still be sent to Leaf2, which can no longer forward them across the peer link to Leaf1 and therefore has to blackhole them. This is shown below:

When running a ping from Server4, the packets that go to Leaf2 will be dropped:

server4:~$ ping 198.51.100.11

PING 198.51.100.11 (198.51.100.11) 56(84) bytes of data.

64 bytes from 198.51.100.11: icmp_seq=1 ttl=64 time=5.20 ms

64 bytes from 198.51.100.11: icmp_seq=2 ttl=64 time=5.03 ms

64 bytes from 198.51.100.11: icmp_seq=3 ttl=64 time=5.75 ms

64 bytes from 198.51.100.11: icmp_seq=4 ttl=64 time=5.77 ms

64 bytes from 198.51.100.11: icmp_seq=5 ttl=64 time=5.59 ms

64 bytes from 198.51.100.11: icmp_seq=6 ttl=64 time=5.83 ms

64 bytes from 198.51.100.11: icmp_seq=7 ttl=64 time=5.52 ms

64 bytes from 198.51.100.11: icmp_seq=12 ttl=64 time=5.36 ms

64 bytes from 198.51.100.11: icmp_seq=20 ttl=64 time=5.84 ms

64 bytes from 198.51.100.11: icmp_seq=22 ttl=64 time=4.50 ms

64 bytes from 198.51.100.11: icmp_seq=23 ttl=64 time=4.90 ms

64 bytes from 198.51.100.11: icmp_seq=24 ttl=64 time=4.70 ms

64 bytes from 198.51.100.11: icmp_seq=25 ttl=64 time=4.41 ms

64 bytes from 198.51.100.11: icmp_seq=26 ttl=64 time=4.42 ms

64 bytes from 198.51.100.11: icmp_seq=29 ttl=64 time=5.55 ms

64 bytes from 198.51.100.11: icmp_seq=31 ttl=64 time=4.62 ms

64 bytes from 198.51.100.11: icmp_seq=33 ttl=64 time=4.94 ms

64 bytes from 198.51.100.11: icmp_seq=34 ttl=64 time=5.26 ms

Note that in a real physical environment, most likely either all of the packets would make it or all of them would be dropped. My virtual lab does load sharing differently due to how Nexus9000v has implemented VXLAN. The important point is that devices connected to only one leaf can end up being black holed. There’s not much we can do other than to have a reliable peer link.

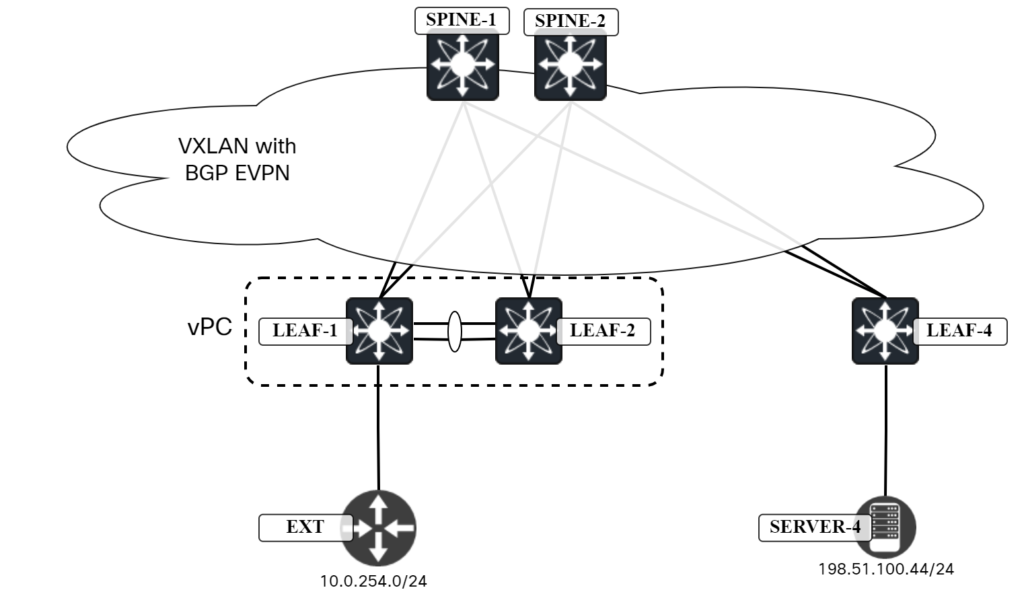

There is another scenario though involving RT5. In this scenario there is an external network connected only to Leaf1 as shown below:

Leaf1 is learning this route via BGP:

Leaf1# show bgp vrf Tenant1 ipv4 unicast 10.0.254.0/24

BGP routing table information for VRF Tenant1, address family IPv4 Unicast

BGP routing table entry for 10.0.254.0/24, version 25

Paths: (1 available, best #1)

Flags: (0x880c001a) (high32 0x000020) on xmit-list, is in urib, is best urib route, is in HW, exported

vpn: version 23, (0x00000000100002) on xmit-list

Advertised path-id 1, VPN AF advertised path-id 1

Path type: external, path is valid, is best path, no labeled nexthop, in rib, is extd

AS-Path: 64512 , path sourced external to AS

10.0.100.1 (metric 0) from 10.0.100.1 (192.168.128.223)

Origin IGP, MED 0, localpref 100, weight 0

Extcommunity: RT:65000:10001

VRF advertise information:

Path-id 1 not advertised to any peer

VPN AF advertise information:

Path-id 1 not advertised to any peer

Now, when Leaf1 advertises this route into EVPN, it sets the next hop to the anycast VTEP IP (203.0.113.12):

Leaf4# show bgp l2vpn evpn 10.0.254.0

BGP routing table information for VRF default, address family L2VPN EVPN

Route Distinguisher: 192.0.2.3:3

BGP routing table entry for [5]:[0]:[0]:[24]:[10.0.254.0]/224, version 14499

Paths: (2 available, best #2)

Flags: (0x000002) (high32 00000000) on xmit-list, is not in l2rib/evpn, is not in HW

Path type: internal, path is valid, not best reason: Neighbor Address, no labeled nexthop

Gateway IP: 0.0.0.0

AS-Path: 64512 , path sourced external to AS

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Origin IGP, MED 0, localpref 100, weight 0

Received label 10001

Extcommunity: RT:65000:10001 ENCAP:8 Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.2

Advertised path-id 1

Path type: internal, path is valid, is best path, no labeled nexthop

Imported to 2 destination(s)

Imported paths list: Tenant1 L3-10001

Gateway IP: 0.0.0.0

AS-Path: 64512 , path sourced external to AS

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Origin IGP, MED 0, localpref 100, weight 0

Received label 10001

Extcommunity: RT:65000:10001 ENCAP:8 Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.1

Path-id 1 not advertised to any peer

What problem will this cause? Well, Leaf2 also owns the anycast IP 203.0.113.12, so it can receive this traffic, but it has no route for 10.0.254.0/24:

Leaf2# show bgp l2vpn evpn 10.0.254.0

BGP routing table information for VRF default, address family L2VPN EVPN

Leaf2# show ip route 10.0.254.0/24 vrf Tenant1

IP Route Table for VRF "Tenant1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

Route not found

Remember, vPC is an L2 technology, so no L3 information (beyond ARP) is sent via CFS. The BGP route originated by Leaf1 can’t be installed on Leaf2 as SOO prevents it, and even if it were installed, it would point towards an IP that belongs to Leaf2 itself.

Let’s try to ping 10.0.254.1 from Server4:

server4:~$ ping 10.0.254.1

PING 10.0.254.1 (10.0.254.1) 56(84) bytes of data.

64 bytes from 10.0.254.1: icmp_seq=1 ttl=253 time=6.37 ms

64 bytes from 10.0.254.1: icmp_seq=3 ttl=253 time=6.38 ms

64 bytes from 10.0.254.1: icmp_seq=6 ttl=253 time=5.48 ms

64 bytes from 10.0.254.1: icmp_seq=8 ttl=253 time=6.02 ms

64 bytes from 10.0.254.1: icmp_seq=9 ttl=253 time=7.43 ms

^C

--- 10.0.254.1 ping statistics ---

9 packets transmitted, 5 received, 44.4444% packet loss, time 8077ms

rtt min/avg/max/mdev = 5.480/6.334/7.428/0.637 ms

Some packets make it and others don’t, depending on whether the traffic goes via Leaf2. Once again, in a physical environment the load sharing would look different: most likely either all packets would make it or none would.
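To see exactly which probes were lost, the ping output can be checked for gaps in the sequence numbers. The following is a minimal Python sketch, not part of the lab itself; the function name `missing_sequences` is made up for illustration, and the reply lines are copied from the ping to 10.0.254.1 above.

```python
import re

# Reply lines copied from the ping to 10.0.254.1 shown in this post.
PING_OUTPUT = """\
64 bytes from 10.0.254.1: icmp_seq=1 ttl=253 time=6.37 ms
64 bytes from 10.0.254.1: icmp_seq=3 ttl=253 time=6.38 ms
64 bytes from 10.0.254.1: icmp_seq=6 ttl=253 time=5.48 ms
64 bytes from 10.0.254.1: icmp_seq=8 ttl=253 time=6.02 ms
64 bytes from 10.0.254.1: icmp_seq=9 ttl=253 time=7.43 ms
"""


def missing_sequences(output: str, transmitted: int) -> list[int]:
    """Return the icmp_seq numbers (1..transmitted) that never got a reply."""
    received = {int(n) for n in re.findall(r"icmp_seq=(\d+)", output)}
    return [seq for seq in range(1, transmitted + 1) if seq not in received]


# ping reported 9 packets transmitted.
lost = missing_sequences(PING_OUTPUT, transmitted=9)
print(lost)                    # [2, 4, 5, 7]
print(f"{len(lost) / 9:.4%}")  # 44.4444%
```

The four missing probes account for the 44.4444% loss that ping reported. In this lab, those would be the packets that were hashed towards Leaf2.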

Is there something we can do to resolve the scenario with RT5? There is! There are actually three options:

- Connect external networks to both leaf switches in the vPC pair.

- Set up routing between the two leafs.

- Advertise the prefix with the primary IP of NVE instead of anycast IP.

I will briefly cover the first two before showing the third option. The first option, which you should always strive for with vPC, is to connect the external network to both leafs in the vPC pair:

As the external network is now connected to Leaf2 as well, it will be able to route towards it.

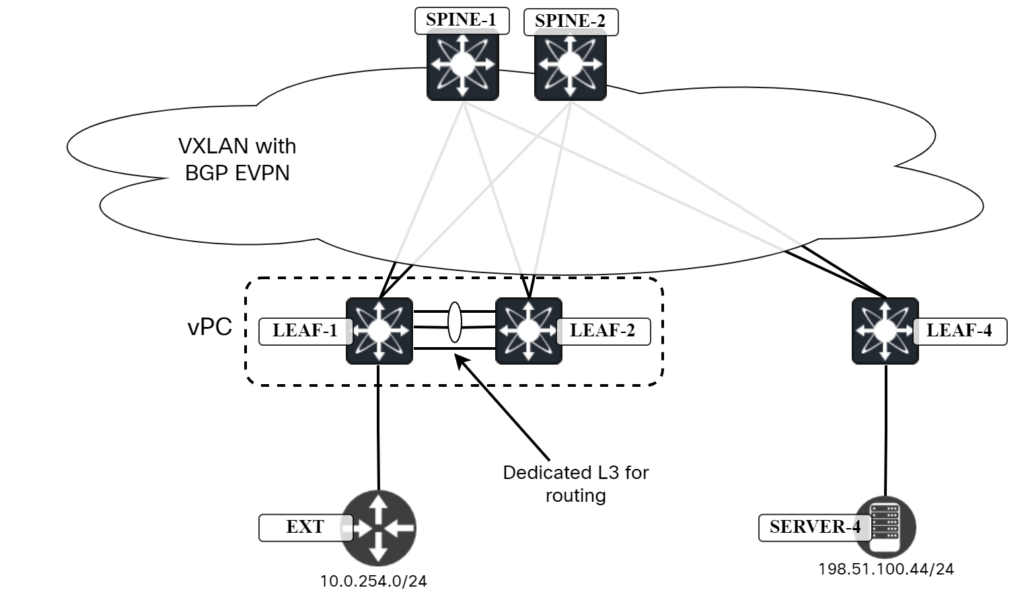

If it’s not possible to connect to both leaf switches, another option is to set up routing between the two leafs. A dedicated L3 interface (non-vPC) will be needed between the two leafs:

Configure a routing protocol between the two leafs, for example BGP. There would need to be one L3 interface for every VRF, likely using subinterfaces. As the L3 interface becomes a critical path between the two leafs, it may be desirable to have two interfaces between the leafs.

Now, for the option of advertising prefixes in EVPN with the primary IP, let’s first look at what the prefixes look like now. I’ve done some filtering to show the most important information:

Leaf4# show bgp l2vpn evpn 198.51.100.11 | i 203.0.113.12|MAC|Originator

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.1

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.2

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.f3bb.1b08

Originator: 192.0.2.4 Cluster list: 192.0.2.2

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:00ad.f3bb.1b08

Originator: 192.0.2.4 Cluster list: 192.0.2.1

These are RT2 routes. Leaf1 advertises them with an RMAC of 00ad.e688.1b08 and Leaf2 advertises them with an RMAC of 00ad.f3bb.1b08. Let’s take a look at the RT5 from Leaf1:

Leaf4# show bgp l2vpn evpn 10.0.254.0

BGP routing table information for VRF default, address family L2VPN EVPN

Route Distinguisher: 192.0.2.3:3

BGP routing table entry for [5]:[0]:[0]:[24]:[10.0.254.0]/224, version 14499

Paths: (2 available, best #2)

Flags: (0x000002) (high32 00000000) on xmit-list, is not in l2rib/evpn, is not in HW

Path type: internal, path is valid, not best reason: Neighbor Address, no labeled nexthop

Gateway IP: 0.0.0.0

AS-Path: 64512 , path sourced external to AS

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Origin IGP, MED 0, localpref 100, weight 0

Received label 10001

Extcommunity: RT:65000:10001 ENCAP:8 Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.2

Advertised path-id 1

Path type: internal, path is valid, is best path, no labeled nexthop

Imported to 2 destination(s)

Imported paths list: Tenant1 L3-10001

Gateway IP: 0.0.0.0

AS-Path: 64512 , path sourced external to AS

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Origin IGP, MED 0, localpref 100, weight 0

Received label 10001

Extcommunity: RT:65000:10001 ENCAP:8 Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.1

Path-id 1 not advertised to any peer

Now, let’s enable advertising RT5 with the primary IP on Leaf1 and Leaf2. This is done using the following commands:

router bgp 65000

address-family l2vpn evpn

advertise-pip

!

interface nve1

advertise virtual-rmac

Now let’s take a look at what happened to the RT2:

Leaf4# show bgp l2vpn evpn 198.51.100.11 | i 203.0.113.12|MAC|Originator

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:0200.cb00.710c

Originator: 192.0.2.3 Cluster list: 192.0.2.1

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:0200.cb00.710c

Originator: 192.0.2.3 Cluster list: 192.0.2.2

203.0.113.12 (metric 81) from 192.0.2.12 (192.0.2.2)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:0200.cb00.710c

Originator: 192.0.2.4 Cluster list: 192.0.2.2

203.0.113.12 (metric 81) from 192.0.2.11 (192.0.2.1)

Extcommunity: RT:65000:10000 RT:65000:10001 SOO:203.0.113.12:0 ENCAP:8

Router MAC:0200.cb00.710c

Originator: 192.0.2.4 Cluster list: 192.0.2.1

The next-hop is still the anycast IP of 203.0.113.12, but the RMAC is now 0200.cb00.710c, which is a virtual RMAC that consists of:

- 0200.

- The anycast IP encoded in hex.

Let’s convert the hex to binary and then to decimal to make sure we have the correct IP:

- c – 1100.

- b – 1011.

- 0 – 0000.

- 0 – 0000.

- 7 – 0111.

- 1 – 0001.

- 0 – 0000.

- c – 1100.

- 11001011 – 203.

- 00000000 – 0.

- 01110001 – 113.

- 00001100 – 12.

203.0.113.12. It checks out!
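The same conversion can be done in a few lines of Python. This is only a sketch of the arithmetic above, assuming the virtual RMAC always has the form 0200 followed by the anycast IP in hex, as seen in this lab; the function names are made up for illustration.

```python
import ipaddress


def virtual_rmac_to_ip(rmac: str) -> str:
    """Decode a 0200-prefixed virtual RMAC back to the IPv4 address it embeds."""
    digits = rmac.replace(".", "").lower()
    if len(digits) != 12 or not digits.startswith("0200"):
        raise ValueError(f"not a 0200-prefixed MAC: {rmac}")
    # The last 8 hex digits are the four octets of the IPv4 address.
    return str(ipaddress.IPv4Address(int(digits[4:], 16)))


def ip_to_virtual_rmac(ip: str) -> str:
    """Build the 0200-prefixed MAC for an IPv4 address, in xxxx.xxxx.xxxx form."""
    digits = "0200" + format(int(ipaddress.IPv4Address(ip)), "08x")
    return ".".join(digits[i:i + 4] for i in range(0, 12, 4))


print(virtual_rmac_to_ip("0200.cb00.710c"))  # 203.0.113.12
print(ip_to_virtual_rmac("203.0.113.12"))    # 0200.cb00.710c
```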

What happened to the RT5 that was advertised?

Leaf4# show bgp l2vpn evpn 10.0.254.0

BGP routing table information for VRF default, address family L2VPN EVPN

Route Distinguisher: 192.0.2.3:3

BGP routing table entry for [5]:[0]:[0]:[24]:[10.0.254.0]/224, version 15013

Paths: (2 available, best #2)

Flags: (0x000002) (high32 00000000) on xmit-list, is not in l2rib/evpn, is not in HW

Path type: internal, path is valid, not best reason: Neighbor Address, no labeled nexthop

Gateway IP: 0.0.0.0

AS-Path: 64512 , path sourced external to AS

203.0.113.1 (metric 81) from 192.0.2.12 (192.0.2.2)

Origin IGP, MED 0, localpref 100, weight 0

Received label 10001

Extcommunity: RT:65000:10001 ENCAP:8 Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.2

Advertised path-id 1

Path type: internal, path is valid, is best path, no labeled nexthop

Imported to 2 destination(s)

Imported paths list: Tenant1 L3-10001

Gateway IP: 0.0.0.0

AS-Path: 64512 , path sourced external to AS

203.0.113.1 (metric 81) from 192.0.2.11 (192.0.2.1)

Origin IGP, MED 0, localpref 100, weight 0

Received label 10001

Extcommunity: RT:65000:10001 ENCAP:8 Router MAC:00ad.e688.1b08

Originator: 192.0.2.3 Cluster list: 192.0.2.1

Path-id 1 not advertised to any peer

The next-hop now points to 203.0.113.1, which is the primary IP of Leaf1. Also note the RMAC of 00ad.e688.1b08, which is the system RMAC of Leaf1. It should now be possible to ping the external destination without losing any packets:

server4:~$ ping 10.0.254.1

PING 10.0.254.1 (10.0.254.1) 56(84) bytes of data.

64 bytes from 10.0.254.1: icmp_seq=1 ttl=253 time=5.73 ms

64 bytes from 10.0.254.1: icmp_seq=2 ttl=253 time=5.81 ms

64 bytes from 10.0.254.1: icmp_seq=3 ttl=253 time=5.39 ms

64 bytes from 10.0.254.1: icmp_seq=4 ttl=253 time=6.25 ms

64 bytes from 10.0.254.1: icmp_seq=5 ttl=253 time=5.33 ms

^C

--- 10.0.254.1 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4005ms

rtt min/avg/max/mdev = 5.331/5.701/6.248/0.330 ms

This is now working! Configuring advertise-pip and advertise virtual-rmac does the following:

- RT2 advertised with anycast IP and virtual RMAC.

- RT5 advertised with primary IP and system RMAC.
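As a recap, the behaviour observed in the outputs in this post can be written down as a small lookup. This is a toy Python model of what was seen in this lab, not how NX-OS implements it. The class and function names are hypothetical, the values are the ones for Leaf1 from the outputs above, and the sketch assumes advertise-pip and advertise virtual-rmac are always enabled together.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VpcLeaf:
    primary_ip: str    # primary IP of the NVE source interface
    anycast_ip: str    # IP shared by both vPC peers
    system_rmac: str   # RMAC unique to this leaf
    virtual_rmac: str  # 0200 + anycast IP in hex


def advertised_attributes(leaf: VpcLeaf, route_type: int, advertise_pip: bool):
    """Return (next hop, RMAC) as observed in this lab for RT2 and RT5."""
    if route_type not in (2, 5):
        raise ValueError("only RT2 and RT5 are covered by this sketch")
    if not advertise_pip:
        # Default behaviour: everything uses the anycast IP and system RMAC.
        return leaf.anycast_ip, leaf.system_rmac
    if route_type == 5:
        # Prefix routes now point at the leaf that actually has the route.
        return leaf.primary_ip, leaf.system_rmac
    # Host routes stay on the anycast IP but switch to the virtual RMAC.
    return leaf.anycast_ip, leaf.virtual_rmac


leaf1 = VpcLeaf("203.0.113.1", "203.0.113.12", "00ad.e688.1b08", "0200.cb00.710c")
print(advertised_attributes(leaf1, 5, advertise_pip=False))
print(advertised_attributes(leaf1, 2, advertise_pip=True))
print(advertised_attributes(leaf1, 5, advertise_pip=True))
```

The last call is the case that fixes the blackholing: the RT5 next hop becomes 203.0.113.1, so remote leafs send traffic for 10.0.254.0/24 only to Leaf1.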

In the next post, we’ll take a look at some enhancements and best practices for vPC in a VXLAN/EVPN topology.